Computational Complexity Review

1. Turing Machines @ Automata by Jeff Ullman

1.1 Introduction

Questions:

- How to show that certain tasks are impossible, or in some cases, possible but intractable?

- Intractable means solvable but only by very slow algorithms

- What can be solved by computer algorithms?

Turing machine (TM):

- In some sense an ultimate automaton.

- A formal computing model that can compute anything we can do with computer or with any other realistic model that we might think of as computing.

- TMs define the class of recursively enumerable languages which thus the largest class of languages about which we can compute anything.

- There is another smaller class called recursive languages that can be thought of as modeling algorithms–computer programs that answer a particular question and then finish.

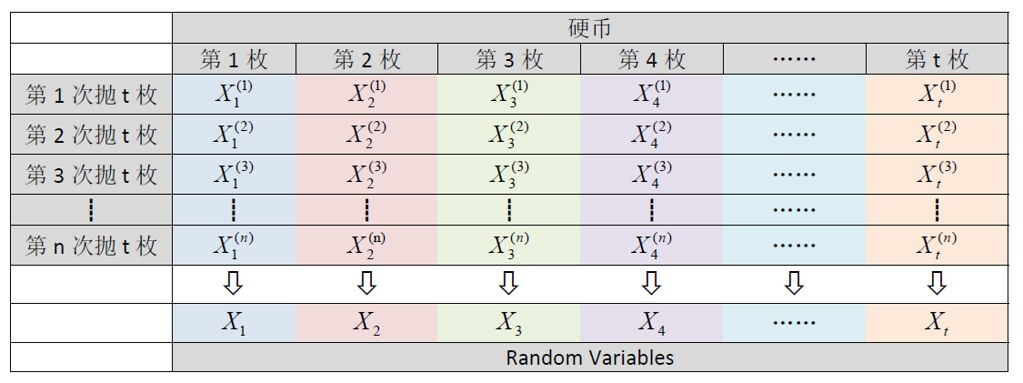

1.2 Data

Data types:

int,float,string, etc.- But at another level, there is only one type $\Rightarrow$

string of bit.- It is more convenient to think of binary strings as integers.

- Programs are also strings and thus strings of bits.

- The fact that programs and data are at heart the same thing is what let us build a powerful theory about what is not computable.

“The $i^{th}$ data”:

- Integers $\Rightarrow 1, 2, 3, \dots$

- “Data” can also be mapped to $1, 2, 3, \dots$ (with some tricks from coding or cryptography)

- Then it makes sense to talk about “the $i^{th}$ data”

- E.g. “the $i^{th}$ string”:

- Text strings (ASCII or Unicode) $\Rightarrow$ Binary strings $\overset{\textrm{encode}}{\Rightarrow}$ Integers

- A small glitch: If you think simply of binary integers, then

- $101$ $\Rightarrow$ “the $5^{th}$ string”

- $0101$ $\Rightarrow$ “the $5^{th}$ string”

- $00101$ $\Rightarrow$ “the $5^{th}$ string”

- A small glitch: If you think simply of binary integers, then

- This can be fixed by prepending a $1$ to the string before converting to an integer. Thus,

- $101$ $\Rightarrow$ “the $13^{th}$ string” ($\color{red}{1}101 = 13$)

- $0101$ $\Rightarrow$ “the $21^{st}$ string” ($\color{red}{1}0101 = 21$)

- $00101$ $\Rightarrow$ “the $37^{th}$ string” ($\color{red}{1}00101 = 37$)

- Text strings (ASCII or Unicode) $\Rightarrow$ Binary strings $\overset{\textrm{encode}}{\Rightarrow}$ Integers

- E.g. “the $i^{th}$ proof”:

- A formal proof is a sequence of logical expressions.

- We can encode mathematical expressions of any kind in Unicode.

- Convert expression to a binary string and then an integer.

- Problems:

- A proof is a sequence of expressions, so we need a way to separate them.

- Also, we need to indicate which expressions are given and which follow from previous expressions.

- Quick-and-dirty way to introduce special symbols into binary strings:

- Given a binary string, precede each bit by 0. E.g.:

- $101$ becomes $010001$.

- Use strings of two or more $1$’s as the special symbols. E.g.:

- Let $111$ mean “start of expression”

- Let $11$ mean “end of expression”

- Given a binary string, precede each bit by 0. E.g.:

- E.g. $\color{blue}{111}\color{red}{0}\underline{1}\color{red}{0}\underline{0}\color{red}{0}\underline{1}\color{blue}{11}\color{cyan}{111}\color{red}{0}\underline{0}\color{red}{0}\underline{0}\color{red}{0}\underline{1}\color{red}{0}\underline{1}\color{cyan}{11} = \underline{101},\underline{0011}$

- A formal proof is a sequence of logical expressions.

1.3 Orders of infinity

- There aren’t more “data” than there are integers.

- While the number is infinite, you may aware that there are different orders of infinity, and the integers is of the smallest one.

- E.g. there are more real numbers than there are integers, so there are more real numbers than there are “programs” (think of programs as data).

- This immediately tells you that some real numbers cannot be computed by programs.

- E.g. there are more real numbers than there are integers, so there are more real numbers than there are “programs” (think of programs as data).

1.3.1 Finite sets

- A finite set has a particular integer that is the count of the number of members–the cardinality.

- E.g. $\operatorname{card}(\lbrace a, b, c \rbrace) = 3$

- It is impossible to find a 1-to-1 mapping between a finite set and a proper subset of itself.

1.3.2 Infinite Sets

- Formally, an infinite set is a set for which there is a 1-to-1 mapping between itself and a proper subset of itself.

- E.g. the positive integers $\lbrace 1, 2, 3, \dots \rbrace$ is an infinite set.

- There is a 1-to-1 mapping $1 \mapsto 2, 2 \mapsto 4, 3 \mapsto 6, \dots$ between this set and a proper subset (the set of even integers).

- E.g. the positive integers $\lbrace 1, 2, 3, \dots \rbrace$ is an infinite set.

1.3.3 Countable Sets

- A countable set is a set with a 1-to-1 mapping with the positive integers.

- $\Rightarrow$ Hence, all countable sets are infinite.

- E.g. set of all integers:

- $0 \mapsto 1$

- $-x \mapsto 2x$

- $+x \mapsto 2x+1$

- Thus, order is $0, -1, 1, -2, 2, -3, 3,…$, i.e.

- $1^{st}$ integer is $0$

- $2^{nd}$ integer is $-1$

- etc.

- E.g. set of binary strings, set of Java programs.

- E.g. pairs of integers

- Order the pairs of positive integers first by sum, then by first component: $[1,1], [2,1], [1,2], [3,1], [2,2], [1,3], [4,1], [3,2], \dots, [1,4], [5,1], \dots$

- $[i,j] \mapsto f(i,j) = \frac{(i+j)(i+j-1)}{2} - i + 1 = \frac{(i+j-1)(i+j-2)}{2} + j$

1.3.4 Enumerations

- An enumeration of a set is a 1-to-1 mapping between the set and the positive integers.

- Thus, we have seen enumerations for strings, programs, proofs, and pairs of integers.

1.3.5 Set of all languages over some fixed alphabet

Question: Are the languages over $\lbrace 0,1 \rbrace$ countable?

Answer: No. Proof by Diagonalization (a table of $m$ strings $\times$ $n$ languages):

Suppose we could enumerate all languages over $\lbrace 0,1 \rbrace$ and talk about “the $i^{th}$ language.”

Consider the language:

\[L = \lbrace w \vert w \, \text{is the} \, i^{th} \, \text{binary string and} \, w \, \text{is not in the} \, i^{th} \, \text{language} \, \rbrace\]Clearly, $L$ is a language over $\lbrace 0,1 \rbrace$.

Thus, $L$ is the $j^{th}$ language for some particular $j$.

Let $x$ be the $j^{th}$ string. Is $x$ in $L$?

- If so, $x$ is not in $L$ by definition of $L$.

- If not, then $x$ is in $L$ by definition of $L$.

We have a contradiction: $x$ is neither in $L$ nor not in $L$, so our sole assumption (that there was an enumeration of the languages) is wrong. $\blacksquare$

This is really bad; there are more languages than programs:

- The set of all programs are countable

- The set of all languages are not countable

1.4 Turing-Machine

Turing-Machine Theory:

- One important purpose of the theory of Turing machines is to prove that certain specific languages have no membership algorithm.

- The first step is to prove certain languages about Turing machines themselves have no membership algorithm.

- Reductions are used to prove more common questions undecidable.

Picture of a Turing Machine:

- Finite number of states.

- Infinite tape with cells containing tape symbols chosen from a finite alphabet.

- Tape head: always points to one of the tape cells.

- Action: based on the state and the tape symbol under the head, a TM can

- change state

- overwrite the current symbol

- move the head one cell left or right

Why Turing Machines? Why not represent computation by C programs or something like that?

- You can develop a theory about programs without using Turing machines, but it is easier to prove things about TM’s, because they are so simple.

- And yet they are as powerful as any computer.

- In fact, TMs are more powerful than any computer, since they have infinite memory

- Yes, we could always buy more storage for a computer.

- But the universe is finite so where are you going to get the atoms from which to build all of those discs?

- However, once you accept that the universe is finite, the limitation doesn’t seem to be effecting what we can compute in practice.

- So we are not going to argue that a computer is weaker than a Turing machine in a meaningful way.

- In fact, TMs are more powerful than any computer, since they have infinite memory

1.4.1 Turing-Machine Formalism

A TM is typically described by:

- A finite set of states, $Q$

- An input alphabet, $\Sigma$

- symbol 和 alphabet 的关系是 $\text{symbol} \in \text{alphabet}$

- A tape alphabet, $\Gamma$

- $\Sigma \subseteq \Gamma$

- A transition function, $\delta$

- A start state, $q_0 \in Q$

- A blank symbol, $B \in \Gamma \setminus \Sigma$

- All tape except for the input is blank initially.

- $B$ is never an input symbol.

- A set of final states, $F \subseteq Q$

Conventions:

- $a, b, \dots$ are input symbols.

- $\dots, X, Y, Z$ are tape symbols.

- $\dots, w, x, y, z$ are strings of input symbols.

- $\alpha, \beta, \dots$ are strings of tape symbols.

Transition Function $\delta(q, Z) = (p, Y, D)$:

- Arguments:

- Current state $q \in Q$

- Current tape symbol $Z \in \Gamma$

- Return value (when $\delta(q, Z)$ in not undefined):

- $p$ is a state

- $Y$ is the new tape symbol (to replace the current symbol on the tape head)

- $D$ is a direction, $L$ or $R$

TM Example:

- Description:

- This TM scans its input (all binary bits) left to right, looking for a 1.

- If it finds 1, it changes it to a 0, goes to final state $f$, and halts.

- If it reaches a blank, it changes it to a 1 and moves left.

- Formalism:

- States $Q = \lbrace q \, \text{(start)}, f \, \text{(final)} \rbrace$.

- Input symbols $\Sigma = \lbrace 0, 1 \rbrace$.

- Tape symbols $\Gamma = \lbrace 0, 1, B \rbrace$.

- $\delta(q, 0) = (q, 0, R)$.

- $\delta(q, 1) = (f, 0, R)$.

- $\delta(q, B) = (q, 1, L)$.

- Example tape: $BB00BB$; starts from the first 0

- $BB\color{red}{0}0BB$, $\delta(q, 0) = (q, 0, R)$, move right

- $BB0\color{red}{0}BB$, $\delta(q, 0) = (q, 0, R)$, move right

- $BB00\color{red}{B}B$, $\delta(q, B) = (q, 1, L)$, move left

- $BB0\color{red}{0}1B$, $\delta(q, 0) = (q, 0, R)$, move right

- $BB00\color{red}{1}B$, $\delta(q, 1) = (f, 0, R)$, move right

- $BB000\color{red}{B}$, undefined, halt.

Instantaneous Descriptions of a Turing Machine:

- Initially, a TM has a tape consisting of a string of input symbols surrounded by an infinity of blanks in both directions.

- The TM is in the start state, and the head is at the leftmost input symbol.

- TM ID’s: (我觉得更合适的名字应该叫 TM State ID)

- An ID is a string $\alpha q \beta$, where

- $\alpha =$ string from the leftmost nonblank to tape head (exclusive)

- $\beta =$ string from the tape head (inclusive) to the rightmost nonblanks.

- I.e. the symbol to the right of $q$ is the one being scanned.

- If an ID is in the form of $\alpha q$, it is scanning a $B$.

- As for PDA’s (Pushdown automaton) we may use symbols

- $ID_i \vdash ID_j$ to represent “$ID_i$ becomes $ID_j$ in one move” and

- $ID_i \vdash^{\star} ID_j$ to represent “$ID_i$ becomes $ID_j$ in zero or more moves,”。

- Example: The moves of the previous TM are $q00 \vdash 0q0 \vdash 00q \vdash 0q01 \vdash 00q1 \vdash 000f$

- An ID is a string $\alpha q \beta$, where

- Formal Definition of Moves:

- If $\delta(q, Z) = (p, Y, R)$, then

- $\alpha qZ \beta \vdash \alpha Yp \beta$

- If $Z$ is the blank $B$, then $\alpha q \vdash \alpha Yp$

- If $\delta(q, Z) = (p, Y, L)$, then

- For any $X$, $\alpha XqZ \beta \vdash \alpha pXY \beta$

- In addition, $qZ \beta \vdash pBY \beta$

- If $\delta(q, Z) = (p, Y, R)$, then

1.4.2 Languages of a TM

There are actually two ways to define the language of a TM:

- A TM defines a language by final state.

- $L(M) = \lbrace w \vert q_0 w \vdash^{\star} I, \, \text{where I is an ID with a final state} \rbrace$

- Or, a TM can accept a language by halting.

- $H(M) = \lbrace w \vert q_0 w \vdash^{\star} I, \, \text{and there is no move possible from ID} \, I \rbrace$

Equivalence of Accepting and Halting:

- If $L = L(M)$, then there is a TM $M’$ such that $L = H(M’)$.

- If $L = H(M)$, then there is a TM $M”$ such that $L = L(M”)$.

Proof:

Final State => Halting:

- Modify $M$ to become $M’$ as follows:

- For each final state of $M$, remove any moves, so $M’$ halts in that state.

- Avoid having $M’$ accidentally halt.

- Introduce a new state $s$, which runs to the right forever; that is $\delta(s, X) = (s, X, R)$ for all symbols $X$.

- If $q$ is not a final state, and $\delta(q, X)$ is undefined, let $\delta(q, X) = (s, X, R)$.

Halting => Final State:

- Modify $M$ to become $M”$ as follows:

- Introduce a new state $f$, the only final state of $M”$.

- $f$ has no moves.

- If $\delta(q, X)$ is undefined for any state $q$ and symbol $X$, define it by $\delta(q, X) = (f, X, R)$. $\blacksquare$

Recursively Enumerable Languages:

- We now see that the classes of languages defined by TM’s using final state and halting are the same.

- This class of languages (that can be defined in both those ways) is called the recursively enumerable languages.

- Why this name? The term actually predates the Turing machine and refers to another notion of computation of functions.

Recursive Languages:

- A proper subset of Recursively Enumerable Languages.

- An algorithm formally is a TM accepting (a language) by final state that halts on any input regardless of whether that input is accepted or not.

- 注意这里的逻辑,algorithm 是 TM 而不是 language.

- If $L = L(M)$ for some TM $M$ that is an algorithm, we say $L$ is a recursive language.

- In other words, the language that is accepted by final state by some algorithm is a recursive language.

- Why this name? Again, don’t ask; it is a term with a history.

- Example: Every CFL (Context-free language) is a recursive language.

- By the CYK algorithm.

2. Decidability @ Automata by Jeff Ullman

Central Ideas:

- TMs can be enumerated, so we can talk about “the $i^{th}$ TM”.

- Thus possible to diagonalize over TMs, showing a language that cannot be the language of any TM.

- Establish the principle that a problem is really a language.

- Therefore some specific problems do not have TMs.

Binary-Strings from TM’s:

- We shall restrict ourselves to TM’s with input alphabet $\lbrace 0, 1 \rbrace$.

- Assign positive integers to the three classes of elements involved in moves:

- States: $q_1$ (start state), $q_2$ (final state), $q_3$, etc.

- Symbols: $X_1$ (0), $X_2$ (1), $X_3$ (blank), $X_4$, etc.

- Directions: $D_1$ (L) and $D_2$ (R).

- Suppose $\delta(q_i, X_j) = (q_k, X_l, D_m)$.

- Represent this rule by string $0^i10^j10^k10^l10^m$. ($0^n$ 表示 $n$ 个连续的 $0$)

- Key point: since integers $i, j, \dots$ are all $> 0$, there cannot be two consecutive $1$’s in these strings.

- Represent a TM by concatenating the codes for each of its moves, separated by $11$ as punctuation.

- That is: $\text{Code}_111\text{Code}_211\text{Code}_311\dots$

- Note: if bianry string $i$ cannot be parsed as a TM, assume the $i^{th}$ TM accepts nothing.

Diagonalization:

- Table of Acceptance (denoted by $A$):

- TM $i=1,2,3,\dots$ $\times$ String $j=1,2,3,\dots$

- $A_{ij} = 0$ means the $i^{th}$ TM does not accept the $j^{th}$ string.

- $A_{ij} = 1$ otherwise.

- Construct a 0/1 sequence $D=d_1 d_2 \dots$, where $d_i = \overline{A_{ii}}$

- $D$ cannot be a row in $A$.

- Question: Let’s suppose $w=10101010\dots$. What does it mean if $w$ appears in the $i^{th}$ row of the table $A$?

- Answer: It means the $i^{th}$ TM exactly accepts the $1^{st}, 3^{rd}, 5^{th}, \dots$ binary strings.

- Let’s give a name to this language–the diagonalization language $L_d = \lbrace w \vert w \text{ is the } i^{th} \text{ string, and the } i^{th} \text{ TM does not accept } w \rbrace$.

- We have shown that $L_d$ is not a recursively enumerable language; i.e., it has no TM.

- There are no more TMs than integers.

- $L_d$ exists but we cannot always tell whether a given binary string $w$ is in $L_d$.

Problems:

- Informally, a “problem” is a yes/no (the output) question about an infinite set of possible instances (the input).

- Example: “Does graph G have a Hamilton cycle (cycle that touches each node exactly once)?

- Each undirected graph is an instance of the “Hamilton-cycle problem.”

- Formally, a problem is a language.

- Each string encodes some instance.

- The string is in the language if and only if the answer to this instance of the problem is “yes.”

- Example: A Problem About Turing Machines

- We can think of the language $L_d$ as a problem.

- 如果 table $A$ 的 string 是 TM 自身的 binary string 的话,这个 problem 就成了 “Does this TM not accept its own code?”

Decidable Problems:

- A problem is decidable if there is an algorithm to answer it.

- Recall: An “algorithm,” formally, is a TM that halts on all inputs, accepted or not.

- Put another way, “decidable problem” = “recursive language.”

- Otherwise, the problem is undecidable.

Bullseye Picture:

- 最中心的集合:Decidable problems = Recursive languages

- 外一圈:Recursively enumerable languages

- Languages accepted by TMs with no guarantee that they will halt on inputs they never accept

- Recursively enumerable languages $\supset$ Recursive languages

- 最外层:Not recursively enumerable languages,比如 $L_d$

- Languages that have no TM at all.

- Recursively enumerable languages 的补集

Examples: Real-world Undecidable Problems

- Can a particular line of code in a program ever be executed?

- Is a given context-free grammar ambiguous?

- Do two given CFG’s generate the same language?

The Universal Language:

- An example of a recursively enumerable, but not recursive language

- We call it $L_u$, of a universal Turing machine, or call it Universal TM language.

- UTM inputs include:

- a binary string representing some TM $M$, and

- a binary string $w$ for $M$

- UTM accepts $\langle M,w \rangle$ if and only if $M$ accepts $w$.

- E.g. JVM

- JVM takes a coded Java program and input for the program and executes the program on the input.

Designing the UTM:

- Inputs are of the form: $\text{Code–for–}M \, 111 \, w$

- Note: A valid TM code never has $111$, so we can split $M$ from $w$.

- The UTM must accept its input if and only if $M$ is a valid TM code and $M$ accepts $w$.

- UTM is a multi-tape machine:

- Tape 1 holds the input $\text{Code–for–}M \, 111 \, w$

- Tape 1 is never changed, i.e. never overwritten.

- Tape 2 represents the current tape of $M$ during the simulation of $M$ with input $w$

- Tape 3 holds the state of $M$.

- Tape 1 holds the input $\text{Code–for–}M \, 111 \, w$

- Step 1: The UTM checks that $M$ is a valid code for a TM.

- E.g., all moves have five components, no two moves have the same state/symbol as first two components.

- If $M$ is not valid, its language is empty, so the UTM immediately halts without accepting.

- Step 2: Assuming the code for $M$ is valid, the UTM next examines $M$ to see how many of its own tape cells it needs to represent one symbol of $M$.

- How to do this: we discover the longest block of $0$s representing a tape symbol and add one cell to that for a marker (e.g. $\text{#}$) between symbols of $M$’s tape.

- Thus if say $X_7$ is the highest numbered symbol then we’ll use 8 squares to represent one symbol of $M$.

- Symbol $X_i$ will be represented $i$ $0$s and $7-i$ blanks followed by a marker outside.

- For example, here’s how we would represent $X_5$: $00000BB\text{#}$.

- Step 3: Initialize Tape 2 to represent the tape of $M$ with input $w$, and initialize Tape 3 to hold the start state (always $q_1$ so it is represented by a single $0$).

- Step 4: Simulate $M$.

- Look for a move on Tape 1 that matches the state on Tape 3 and the tape symbol under the head on Tape 2.

- If we cannot find one then apparently $M$ halts without accepting $w$ so UTM does so as well.

- If found, change the symbol and move the head on Tape 2 and change the State on Tape 3.

- If $M$ accepts (i.e. halts), the UTM also accepts (i.e. halts).

- Look for a move on Tape 1 that matches the state on Tape 3 and the tape symbol under the head on Tape 2.

Proof That $L_u$ is Recursively Enumerable (RE), but not Recursive:

- We designed a TM for $L_u$, so it is surely RE.

- Proof by contradiction:

- Suppose $L_u$ were recursive, which means we could design a UTM $U$ that always halted.

- Then we could also design an algorithm for $L_d$, as follows.

- Given input $w$, we can decide if $w \in L_d$ by the following steps.

- Check that $w$ is a valid TM code.

- If not, then $w$’s language is empty, so $w \in L_d$.

- If valid, use the hypothetical algorithm to decide whether $w \, 111 \, w$ is $\in L_u$.

- If so, then $w \notin L_d$; else $w \in L_d$.

- Check that $w$ is a valid TM code.

- Given input $w$, we can decide if $w \in L_d$ by the following steps.

- But we already know there is no algorithm for $L_d$.

- Thus, our assumption that there was an algorithm for $L_u$ is wrong.

- $L_u$ is RE, but not recursive.

$\blacksquare$

这个证明需要好好解读与总结:

- 什么是 language?

- TM $M$ 的 language $L_M = \lbrace \text{tape (不算两端的 blank 区域)} \vert \text{TM 能从这个 tape 的起始点一直运行到一个 final state, i.e. halt} \rbrace$

- Tape 的本质是什么?是 string 呀

- 我们说 TM $M$ accepts a language,那么同样也可以说 TM $M$ accepts a string,然后所有 $M$ accept 的 string 构成了 $M$ 的 language

- 什么是 algorithm?

- algorithm 是个 TM, which always halts on any input regardless of whether that input is accepted or not

- always halts 是什么意思?你可以理解为 “always generate an output”

- accept 输出 1

- reject 输出 0

- 明显,infinite loop 不可能是一个 algorithm

- always halts 是什么意思?你可以理解为 “always generate an output”

- algorithm 是个 TM, which always halts on any input regardless of whether that input is accepted or not

- 什么是 problem?

- problem is a language

- TM accept a language => algorithm accepts a problem

- 但是我们一般不这么说,因为我们常用的说法是 algorithm solves a problem

- 反过来,a problem is solved by algorithm,也就相当于 “我们可以为 problem 这个 language 找到一个 always halt 的 TM,即找到一个 algorithm”

- 能找到 algorithm 的 problem => Decidable Problem, i.e. Recursive language

- 能找到 TM (但不保证是 algorithm) 的 problem => Recursively enumerable language

- 能找到 TM,但确定不是 algorithm 的 problem => The Universal Language

- 连 TM 都找不到的 problem => Not recursively enumerable language

- $w$ 的两面性

- 如果 TM $M$ 的 binary string 是 $w$,那么

- $w$ 可以表示 TM $M$

- $w$ 可以表示一个 plain string

- 那么我们可以同时有 “$w$ accepts $w$” 和 “$w$ is accepted by $w$” 这两种逻辑

- 如果 TM $M$ 的 binary string 是 $w$,那么

- $L_d$ 的两种解读

- 首先它是个 language,然后 language 可以看做一个集合;然后 problem 也是 language,所以也把 $L_d$ 抽象成一个问题描述

- 貌似默认是把 Acceptance table $A$ 的 string 限定为 TM 的 binary string 的话(相当于是 $w \times w$ 这样的一张表),那么:

- $L_d = \lbrace w \vert \text{TM } w \text{ does not accept string } w \rbrace$

- 你理解为 string 的集合或者 TM 的集合都行,因为 $w$ 的两面性

- 原来的定义是 $L_d = \lbrace w \vert w \text{ is the } i^{th} \text{ string, and the } i^{th} \text{ TM does not accept } w \rbrace$,请自行体会一下是不是可以这么理解

- $L_d$ is a problem “Does this TM not accept its own code?”

- $L_d = \lbrace w \vert \text{TM } w \text{ does not accept string } w \rbrace$

- If $w$ is not a valid TM code:

- 有点无赖地,我们把这样的 $w$ 看做一种特殊的 TM

- $w$’s language is empty

- => $w$ accepts nothing

- => $w$ cannot accept $w$

- => $w \in L_d$

- => $w$ cannot accept $w$

- => $w$ accepts nothing

- $L_u$ 没有 algorithm 意味着什么?

- 给定一个 $\langle M, w \rangle$,没有 algorithm 确定是否有 $M$ accepts $w$

- 还是回到 “$w$ accepts $w$” 这个逻辑上,然后我们可以把 $\langle w, w \rangle$ 写成 $w \, 111 \, w$,所以针对这个输入,没有 algorithm 可以确定是否有 $w$ accepts $w$

3. Extensions and properties of Turing machines @ Automata by Jeff Ullman

17.1 Programming Tricks

Programming Trick: Multiple Tracks

- Enable us to leave markers on the tape so TM can find their way back to an important place.

- If there are $k$ tracks => Treat each symbol as a vector of size $k$

- E.g. $k=3$. $\left( \begin{smallmatrix} 0 \newline 0 \newline 0 \end{smallmatrix} \right)$ represents symbol $0$; $\left( \begin{smallmatrix} B \newline B \newline B \end{smallmatrix} \right)$ represents blank; $\left( \begin{smallmatrix} X \newline Y \newline Z \end{smallmatrix} \right)$ represents symbol $[X,Y,Z]$

Programming Trick: Marking

- $k-1$ tracks for data; 1 track for marks

- Almost all tape cells hold blank in this mark track, but several hold special symbols (marks) that allow the TM to find particular places on the tape.

- E.g. $\left( \begin{smallmatrix} B & X & B \newline W & Y & Z \end{smallmatrix} \right)$ represents an unmarked $W$, a marked $Y$ and an unmarked $Z$ ($X$ is a mark)

Programming Trick: Caching in the State

- Treat state as a vector.

- First component is the “control state,” i.e. the orginal state

- Other components are used as a cache to hold values the TM needs to remember.

例子待续

17.2 Restrictions

Semi-infinite Tape: 待续

17.3 Extensions

Multitape TM:

- To simulate a $k$-Tape TM, use $2 \times k$ tracks ($2 \times k$ tracks 仍然视为一个 tape;$k$-Tape 是要有 $k$ 个 head 的)

- For every 2 tracks simulating 1 tape:

- 1 track for tape data

- 1 track for tape head (use mark $X$ to indicate the head position)

- 待续

Nondeterministic TM:

- Allow TM to have more than 1 choice of move at each step for any <state, symbol> pair.

- Each choice is a <state,symbol,direction> triple, as for the deterministic TM.

- Simulating a NTM by a DTM: 待续

How a TM can simulate a

- Very large

stores => a significant factor in big-data world, like google's big table. - 待续

17.4 Closure Properties of Recursive and RE Languages

待续

4. Specific undecidable problems

Rice’s theorem: tells us that almost every question we can ask about the recursively enumerable languae is undecidable.

Properties of Languages:

- Any set of languages is a property of languages.

- Example: The infiniteness property is the set of infinite languages.

- If a language $L$ “has property infiniteness”, it means “$L \in \text{ property infiniteness}$”

- In what follows, we’ll focus on properties of RE languages, because we can’t represent other languages by TM’s.

- We shall think of a property as a problem about Turing machines.

- 我们可以认为 property $P = \lbrace L \vert L \text{ has property } P \rbrace$。从这个角度看,property 是一个关于 languages 的 language;进一步,property 是一个 problem。

- 这个 problem 可以描述为:Given a language, does it have property $P$?

- 那尽然 property 是一个 problem,我们就可以说 “property is decidable/undecidable”

- 又因为 $L$ 可以由 TM 产生,所以我们进一步定义:For any property $P$, we can define a language $L_P$, the set of binary strings for TM $M$ such that $L(M)$ has property $P$.

- 简单说就是 $L(M) \in P \Rightarrow M \in L_P$

- 我们可以把 $L_P$ 视为这样的一个 problem:Given a code for a TM, does it define a language with that property $P$?

- 我们可以认为 property $P = \lbrace L \vert L \text{ has property } P \rbrace$。从这个角度看,property 是一个关于 languages 的 language;进一步,property 是一个 problem。

- There are two (trivial) properties $P$ for which $L_P$ is decidable.

- The always-false property, which contains no RE languages.

- E.g. “this language is not RE.”

- How do we decide this property? I.e. the algorithm for this property is: Given an input $w$, ignore it and say no (reject).

- Empty language is a RE language.

- The always-true property, which contains every RE language.

- E.g. “this language is RE.”

- How do we decide this property? I.e. the algorithm for this property is: Given an input $w$, ignore it and say yes (accept).

- The always-false property, which contains no RE languages.

- Rice’s Theorem: For every other (i.e. non-trivial) property $P$, $L_P$ is undecidable.

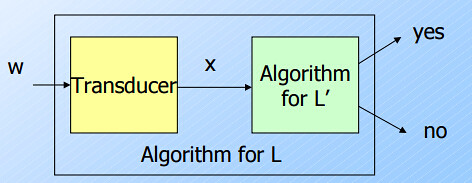

Reductions:

- A reduction from language $L$ to language $L’$ is an algorithm (TM that always halts) that takes a string $w$ and converts it to a string $x$, with the property that: $x$ is in $L’$ if and only if $w$ is in $L$.

- The value of having such a reduction is that it tells us $L$ is no harder than $L’$, at least as far as decidability is concerned.

- TM’s as Transducers

- We have regarded TM’s as acceptors of strings.

- But we could just as well visualize TM’s as having an output tape, where a string is written prior to the TM halting.

- If we reduce $L$ to $L’$, and $L’$ is decidable, then the algorithm for $L$ + the algorithm of the reduction shows that $L$ is also decidable.

- Normally used in the contrapositive (逆否命题).

- If we know $L$ is not decidable, then $L’$ cannot be decidable.

- More powerful forms of reduction: if there is an algorithm for $L’$, then there is an algorithm for $L$.

- E.g. we reduced $L_d$ to $L_u$

- More in NP-completeness discussion on Karp vs. Cook reductions

- The simple reduction is called a Karp reduction.

- More general kinds of reductions are called Cook reductions.

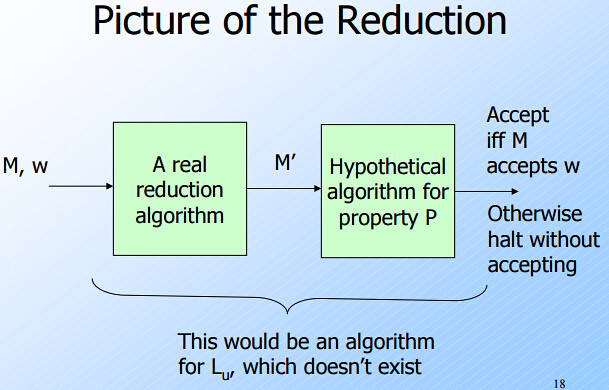

Proof of Rice’s Theorem

- Proof Skeleton

- We shall show that for every nontrivial property $P$ of the RE languages, $L_P$ is undecidable.

- We show how to reduce $L_u$ to $L_P$.

- Since we know $L_u$ is undecidable, it follows that $L_P$ is also undecidable.

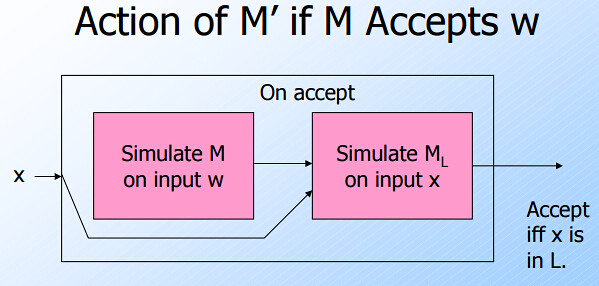

- The Reduction

- The input to $L_u$, is a TM $M$ and an input $w$ for $M$. Then our reduction algorithm produces the code for a TM $M’$.

- Define property $P = “M \text{ accepts } w”$.

- Thus $L(M’)$ has property $P$ if and only if $M$ accepts $w$.

- “$L(M’)$ has property $P$” 也就意味着 “$M’$ accepts a language with property $P$”

- That is $M’ \in L_P$ if and only if $\langle M,w \rangle \in L_u$.

- Thus $L(M’)$ has property $P$ if and only if $M$ accepts $w$.

- $M’$ has two tapes, used for:

- Simulates another TM $M_L$ on $M’$’s own input, say $x$

- The transducer (which in fact is $M$) does not deal with or see $x$

- Simulates $M$ on $w$.

- Note: neither $M$, $M_L$, nor $w$ is input to $M’$.

- Simulates another TM $M_L$ on $M’$’s own input, say $x$

- Assume that the empty language $\emptyset$ does not have property $P$.

- If it does, consider the complement of $P$, say $Q$. $\emptyset$ then has property $Q$.

- If we could prove that $Q$ are undecidable, then $P$ must be undecidable. That is if $L_P$ were a recursive language, then so would be $L_Q$ since the recursive languages are closed under complementation.

- Let $L$ be any language with property $P$, and let $M_L$ be a TM that accepts $L$.

- Design of $M’$

- On the second tape, $M’$ writes $w$ and then simulate $M$ on $w$.

- If $M$ accepts $w$, then simulate $M_L$ on the input $x$ on the first tape.

- $M’$ accepts its input $x$ if and only if $M_L$ accepts $x$, i.e. $x \in L$.

- If $M$ does not accept $w$, $M’$ never gets to the stage where it simulate $M_L$, and therefore $M’$ accept nothing.

- In this case, $M’$ defines an empty language, which does not have property $P$.

- Suppose $M$ accepts $w$.

- Then $M’$ simulates $M_L$ and therefore accepts $x$ if and only if $x$ is in $L$.

- That is, $L(M) = L$, $L(M’)$ has property $P$, and $M’ \in L_P$.

- Suppose $M$ does not accept $w$.

- Then $M’$ never starts the simulation of $M_L$, and never accepts its input $x$.

- Thus, $L(M’) = \emptyset$, and $L(M’)$ does not have property $P$.

- That is, $M’ \not \in L_P$.

- Thus, the algorithm that converts $M$ and $w$ to $M’$ is a reduction of $L_u$ to $L_P$.

- Thus, $L_P$ is undecidable.

5. Turing Machines @ Erickson §6

6.1 Why Bother?

Admittedly, Turing machines are a terrible model for thinking about fast computation; simple operations that take constant time in the standard random-access model can require arbitrarily many steps on a Turing machine. Worse, seemingly minor variations in the precise definition of “Turing machine” can have significant impact on problem complexity. As a simple example (which will make more sense later), we can reverse a string of n bits in $O(n)$ time using a two-tape Turing machine, but the same task provably requires $\Omega(n^2)$ time on a single-tape machine.

But here we are not interested in finding fast algorithms, or indeed in finding algorithms at all, but rather in proving that some problems cannot be solved by any computational means. Such a bold claim requires a formal definition of “computation” that is simple enough to support formal argument, but still powerful enough to describe arbitrary algorithms. Turing machines are ideal for this purpose. In particular, Turing machines are powerful enough to simulate other Turing machines, while still simple enough to let us build up this self-simulation from scratch, unlike more complex but efficient models like the standard random-access machine.

(Arguably, self-simulation is even simpler in Church’s $\lambda$-calculus, or in Schönfinkel and Curry’s combinator calculus, which is one of many reasons those models are more common in the design and analysis of programming languages than Turing machines. Those models much more abstract; in particular, they are harder to show equivalent to standard iterative models of computation.)

6.2 Formal Definitions

- Three distinct special states $\lbrace \text{start}, \text{accept}, \text{reject} \in Q \rbrace$.

- A transition function $\delta: (Q \setminus \lbrace \text{start}, \text{reject} \rbrace) \times \Gamma \rightarrow Q \times \Gamma \times \lbrace −1,+1 \rbrace$.

7. Turing Machines @ Computational Complexity by Mike Rosulek

Languages and UTM (Lecture 1 & 2)

Language:

- A language is a subset of $\lbrace 0,1 \rbrace^\star$ (actually it could be any subset of $\lbrace 0,1 \rbrace^\star$)

- A language $L$ is a set of “yes-instances”

- $x$ is a “yes-instance” if $x \in L$

- Language $S$ is Turing-recognizable (“recursively enumerable / r.e.”) if $\exists$ TM $M$, such that $\forall x$

- $x \in S \Rightarrow M$ accepts $x$

- $x \not\in S \Rightarrow M$ rejects $x$ or runs forever

- Language $S$ is Turing-decidable (“recursive”) if $\exists$ TM $M$, such that $\forall x$

- $x \in S \Rightarrow M$ accepts $x$

- $x \not\in S \Rightarrow M$ rejects $x$

- An algorithm formally is a TM which

- halts on any input regardless of whether that input is accepted or not, and

- accepts (a language) by final state

- In other words, language $S$ is Turing-decidable (“recursive”) if $\exists$ an algorithm for $S$.

- If $M$ is a TM, define $L(M) = \lbrace x \vert M \text{ accepts } x \rbrace$

Programming conventions:

- Programming a TM for real is cruel!

- Describe a TM “program” in terms of tape modifications & head movements

- “Mark” cells on the tape (e.g., $a \rightarrow \acute{a}$)

E.g. TM algorithm for $\text{Palindromes} = \lbrace x \vert x = reverse(x) \rbrace$:

- “Mark” first char (e.g., $O \rightarrow \emptyset$), remember that char in internal state

- If char to the right is “marked” or blank, then accept; else scan right until blank or a “marked” char

- If $\text{prev char} \neq \text{remembered char}$, reject; else mark it

- Scan left to leftmost unmarked char; if no more unmarked chars, accept; else repeat from step #1

Universal Machines:

- TMs can be encoded as strings (“code is data”)

- Convention: every string encodes some TM

- $\langle M \rangle$: encoding of a TM $M$

- $\langle M,x \rangle$: encoding of a TM $M$ and a string $x$

- $L_{acc} = \lbrace \langle M,x \rangle \vert M \text{ is a TM that accepts } x \rbrace$ is Turing-recognizable (RE)

- Suppose there such a TM $U$ that accept $\langle M,x \rangle$, i.e. $U(\langle M,x \rangle) = \text{yes}$.

- $U \text{ accepts } \langle M,x \rangle \iff \langle M,x \rangle \in L_{acc}$.

- In other words, $U$ recognizes $L_{acc}$, i.e. $L(U) = L_{acc}$

- TM $U$ can recognizes $L_{acc}$, which means it can simulate any TM $M$! Thus call it a “universal TM”

Design of Universal TM:

- On input $\langle M,x \rangle$, use 3 tapes:

- one for description of $M$

- one for $M$’s work tape contents

- one for $M$’s current state

- Transitions: $\langle \text{state, char, newstate, newchar, direction} \rangle$

- Legal to say “simulate execution of $M$ on input $x$” in our TM pseudocode!

– If $M$ halts on $x$, the simulation will also halt

– “Simulate execution of $M$ on input $x$ for $t$ steps” also possible (always halts)

- Can simulate $t$ steps of a TM in $O(t \log t)$ steps

Diagonalization and Reduction (Lecture 2 & 3)

Diagonalization:

- $L_{acc} = \lbrace \langle M,x \rangle \vert M \text{ is a TM that accepts } x \rbrace$ is not Turing-decidable (“recursive”)

- Likewise, $L_{halt} = \lbrace \langle M,x \rangle \vert M \text{ is a TM that halts } x \rbrace$

- 这里的 $L_{acc}$ 即前面 Jeff Ullman 的 $L_u$

(Turing) Reductions:

- Motivation:

- Let’s not use diagonalization technique every time to prove something undecidable!

- Instead, “reduce” one problem to another.

- What does “Alg decides lang” mean?

- Language $L = \lbrace x \vert x \text{ is/satisfies blab blah blah} \rbrace$ is a collection of yes-instances.

- An $x$ that is/satisfies “blab blah blah” is a yes-instance.

- An algorithm is in nature a TM, so we can say “an algorithm $M$”.

- If we say “$M$ decides $L$”, it means:

- $L(M) = L$

- Imagine $M$ as a function,

- The datatype of the parameter to $M$ is the the one of the elements of $L$. E.g.

- if $L=\lbrace x \vert \dots \rbrace$, elements are $x$’s. Therefore the function signature is $M(x)$;

- if $L=\lbrace \langle x,y \rangle \vert \dots \rbrace$, elements are $\langle x,y \rangle$’s. Therefore the function signature is $M(\langle x,y \rangle)$.

- $M(x)=\text{yes}$ if $x$ is/satisfies “blab blah blah”

- $M(x)=\text{no}$ otherwise

- The datatype of the parameter to $M$ is the the one of the elements of $L$. E.g.

- If we say “go find an algorithm for language”, it means:

- go find an $M$ such that $L(M) = L$

- go find an $M$ such that $M(x)=\text{yes}$ if $x$ is/satisfies “blab blah blah”

- Language $L = \lbrace x \vert x \text{ is/satisfies blab blah blah} \rbrace$ is a collection of yes-instances.

- Definition:

- We say $L_1 \leq_T L_2$ (“$L_1$ Turing-reduces to $L_2$”) if there is an algorithm that decides $L_1$ using a (hypothetical) algorithm that decides $L_2$.

- Inference:

- CASE 1: implication

- Ground Truth: $L_2$ is decidable

- Goal: To prove $L_1$ is also decidable

- Method: Construct an algorithm $M_1$ for $L_1$ which satisfies $L_1 \leq_T L_2$

- $L_2$ is decidable so there must exist an algorithm $M_2$ for $L_2$

- Call $M_2$ inside $M_1$

- In this way we prove that $L_1 \leq_T L_2$ holds.

- CASE 2: contrapositive

- Ground Truth: $L_1$ is undecidable

- Goal: To prove $L_2$ is also undecidable

- Method: Assume $L_2$ is decidable. Under this assumption, show we can construct an algorithm $M_1$ for $L_1$ which satisfies $L_1 \leq_T L_2$, i.e. $L_1 \leq_T L_2$ holds.

- In this way we imply that $L_1$ is decidable.

- However, we already know that $L_1$ is undecidable.

- Therefore the assumption is invalid.

- CASE 1: implication

- E.g. show that $L_{empty} = \lbrace \langle M \rangle \vert M \text{ is a TM and } L(M) = \emptyset \rbrace$ is undecidable.

- Choose $L_{acc} = \lbrace \langle M,x \rangle \vert M \text{ is a TM that accepts } x \rbrace$ as $L_1$

- $L_{empty}$ is $L_2$.

- Assume there exists an algorithm $M_{empty}$.

- Construct an algorithm $M_{acc}$ using $M_{empty}$:

- Signature: $M_{acc}(\langle M,x \rangle)$

- For every single pair of input $\langle M_i,x_j \rangle$:

- Construct an TM $M_{ij}^{\star}(z) = \lbrace \text{ignore } z; \text{return } M_i(x_j) \rbrace$

- 根据 $L_{acc}$ 的语义,$M_i$ 要么 accept $x_j$,要么 reject

- CASE 1: If $M_i$ accepts $x_j$, $M_i(x_j)=\text{yes}$.

- Therefore $M_{ij}^{\star}(z) = \lbrace \text{ignore } z; \text{return yes} \rbrace$.

- I.e. $M_{ij}^{\star}$ accept every $z$.

- I.e. $M_{ij}^{\star}$ accept everything.

- I.e. $L(M_{ij}^{\star}) = \Omega$ (全集)

- I.e. $\langle M_{ij}^{\star} \rangle \not \in L_{empty}$.

- I.e. $M_{empty}(\langle M_{ij}^{\star} \rangle) = \text{no}$.

- CASE 2: If $M_i$ rejects $x_j$, $M_i(x_j)=\text{no}$.

- Therefore $M_{ij}^{\star}(z) = \lbrace \text{ignore } z; \text{return no} \rbrace$.

- I.e. $M_{ij}^{\star}$ rejects every $z$.

- I.e. $M_{ij}^{\star}$ accept nothing.

- I.e. $L(M_{ij}^{\star}) = \emptyset$

- I.e. $\langle M_{ij}^{\star} \rangle \in L_{empty}$.

- I.e. $M_{empty}(\langle M_{ij}^{\star} \rangle) = \text{yes}$.

- If $M_{empty}(\langle M_{ij}^{\star} \rangle) = \text{yes}$

- I.e. $\langle M_{ij}^{\star} \rangle \in L_{empty}$

- 一路反推到 CASE 2,我们有 $M_i$ rejects $x_j$

- 此时我们的 $M_{acc}(\langle M_i,x_j \rangle)$ 要 return no

- If $M_{empty}(\langle M_{ij}^{\star} \rangle) = \text{no}$

- I.e. $\langle M_{ij}^{\star} \rangle \not \in L_{empty}$

- 一路反推到 CASE 1,我们有 $M_i$ accepts $x_j$

- 此时我们的 $M_{acc}(\langle M_i,x_j \rangle)$ 要 return yes

- Construct an TM $M_{ij}^{\star}(z) = \lbrace \text{ignore } z; \text{return } M_i(x_j) \rbrace$

- Now we showed $L_{acc} \leq_T L_{empty}$. We assumed $L_{empty}$ is decidable, so $L_{acc}$ is also decidable, which is against the truth. Therefore the assumption is invalid and $L_{empty}$ is undecidable.

- 最难的地方在 “Construct an algorithm $M_{acc}$ using $M_{empty}$” 这一步,请结合 $M_{acc}$ 和 $M_{empty}$ 综合考虑。一般的的套路是:

- 根据 $L_1$ 的输入做一个临时变量,然后把这个临时变量当做 $L_2$ 的输入。

- 这个临时变量要满足 $M_2$ 的 signature

- 如果临时变量是一个 TM,比如上面的 $M_{ij}^{\star}$,在处理自身的输入 $z$ 时要见机行事

- 如果能直接 $\text{return }M_i(x_j)$ 或者 $\text{return }!M_i(x_j)$ 无疑是最好的

- 如果不能,考虑 $\text{if } M_i(x_j) = \text{yes}, \text{return } function(z)$ 这样的形式

- 然后根据 $L_2$ 的输出决定 $L_1$ 的输出。

- 根据 $L_1$ 的输入做一个临时变量,然后把这个临时变量当做 $L_2$ 的输入。

// Reduce L_acc to L_empty

M_acc(<M_i,x_j>) {

M*_ij = TurningMachine(z) {

return M_i(x_j)

}

return !M_empty(M*_ij)

}

// General form of reduction algorithm for L_acc

M_acc(<M_i,x_j>) {

M*_ij = TurningMachine(z) {

if(M_i(x_j) = yes) {

return f_1(z)

} else {

return f_2(z)

}

}

return f_3(M_2(M*_ij))

}

Rice’s Theorem (Lecture 4)

Rice’s Theorem. $\lbrace \langle M \rangle \vert L(M) \text{ has property } P \rbrace$ is undecidable if $P$ is non-trivial.

- Non-trivial means:

- $\exists$ $M_Y$ that $L(M_Y)$ has property $P$;

- also $\exists$ $M_N$ that $L(M_N)$ does not has property $P$.

- I.e. “是否有 property $P$” 这个问题并不是永真或者永假

In other words, given encoding of $M$:

- Can’t decide whether $L(M)$ is empty

- Can’t decide whether some special $x \in L(M)$

- Can’t decide whether $L(M)$ is finite

- etc.

Define $L_P = \lbrace \langle M \rangle \vert L(M) \text{ has property } P \rbrace$.

Claim. $L_{acc} \leq_T L_P$.

Proof.

- By “non-trivial”:

- Suppose $\emptyset$ does not have property $P$.

- $\exists$ $M_Y$ that $L(M_Y)$ has property $P$.

- For every single pair of input $\langle M_i,x_j \rangle$, construct an TM $M_{ij}^{\star}(z)$, such that on input $z$

- If $M_i(x_j) = \text{yes}$

- return $M_Y(z)$

- else return No

- If $M_i(x_j) = \text{yes}$

- If $M_{ij}^{\star} \in L_P$, $M_{acc}(\langle M_i,x_j \rangle)$ return Yes; else No

- 逻辑是:

- If $M_i$ accepts $x_j$, $M_{ij}^{\star}$ 等价于 $M_Y$,此时 $M_{ij}^{\star}$ 应该具有 property $P$.

- If $M_i$ rejects $x_j$, $M_{ij}^{\star}$ 等价于 $\emptyset$,此时 $M_{ij}^{\star}$ 应该不具有 property $P$

$\blacksquare$

Kolmogorov Complexity (or, “optimal compression is hard!”) (Lecture 5 & 6) @ Algorithmic Information Theory and Kolmogorov Complexity

Problem:

- 假设 compress $x$ 得到 $y$,decompress $y$ 得到 $x$

- 假设有一个 decompression algorithm $U$ that $U(y)=x$

- $K_U(x) = \min \lbrace \lvert y \rvert \vert U(y)=x \rbrace$

- How small $x$ can be compressed?

- Here $\lvert y \rvert$ denotes the length of a binary string $y$

- In other words, the complexity of $x$ is defined as the length of the shortest description of $x$ if each binary string $y$ is considered as a description of $U(y)$

Optimal decompression algorithm:

- The definition of $K_U$ depends on $U$.

- For the trivial decompression algorithm $U(y) = y$ we have $K_U(x) = \vert x \vert$.

- One can try to find better decompression algorithms, where “better” means “giving smaller complexities”

Definition 1. An algorithm $U$ is asymptotically not worse than an algorithm $V$ if $K_U(x) \leq K_V(x)+C$ for some constant $c$ and for all $x$.

Theorem 1. There exists an decompression algorithm $U$ which is asymptotically not worse than any other algorithm $V$.

Such an algorithm is described as asymptotically optimal.

- The complexity $K_U$ with respect to an asymptotically optimal $U$ is called Kolmogorov complexity.

- Assume that some asymptotically optimal decompression algorithm $U$ is fixed, the Kolmogorov complexity of a string $x$ is denoted by $K(x)$ ($=K_U(x)$).

- The complexity $K(x)$ can be interpreted as the amount of information in $x$ or the “compressed size” of $x$.

The construction of optimal decompression algorithm:

- The idea of the construction is used in the so-called “self-extracting archives”. Assume that we want to send a compressed version of some file to our friend, but we are not sure he has the decompression program. What to do? Of course, we can send the program together with the compressed file. Or we can append the compressed file to the end of the program and get an executable file which will be applied to its own contents during the execution.

- 待补充。

Basic properties of Kolmogorov complexity:

- 待补充。

Algorithmic properties of $K$

- 结合 Berry Paradox 补充

Complexity and incompleteness:

- 不懂

Algorithmic properties of $K$ (continued):

- 不懂

An encodings-free definition of complexity:

- 不懂

Axioms of complexity:

- 不懂

Kolmogorov Complexity (or, “optimal compression is hard!”) (Lecture 5 & 6) @ Wikipedia

Definition

If $P$ is a program which outputs a string $x$, then $P$ is a description of $x$. The length of the description is just the length of $P$ as a character string, multiplied by the number of bits in a character (e.g. 7 for ASCII).

We could, alternatively, choose an encoding for Turing machines $\langle M \rangle$. If $M$ is a Turing Machine which, on input $w$, outputs string $x$, then the concatenated string $\langle M \rangle w$ is a description of $x$.

Any string $s$ has at least one description, namely the program:

function GenerateFixedString()

return s

If a description of $s$, $d(s)$, is of minimal length (i.e. it uses the fewest bits), it is called a minimal description of $s$. Thus, the length of $d(s)$ (i.e. the number of bits in the description) is the Kolmogorov complexity of $s$, written $K(s)$. Symbolically, $K(s) = \vert d(s) \vert$.

Invariance theorem

Informal treatment

Theorem. Given any description language $L$, the optimal description language is at least as efficient as $L$, with some constant overhead.

Proof: Any description $D$ in $L$ can be converted into a description in the optimal language by first describing $L$ as a computer program $P$ (part 1), and then using the original description $D$ as input to that program (part 2). The total length of this new description $D’$ is (approximately): $\vert D’ \vert = \vert P \vert + \vert D \vert$.

The length of $P$ is a constant that doesn’t depend on $D$. So, there is at most a constant overhead, regardless of the object described. Therefore, the optimal language is universal up to this additive constant. $\blacksquare$

A more formal treatment

Theorem. If $K_1$ and $K_2$ are the complexity functions relative to Turing complete description languages $L_1$ and $L_2$, then there is a constant $c$ – which depends only on the languages $L_1$ and $L_2$ chosen – such that

\[\forall s, -c \leq K_1(s) - K_2(s) \leq c.\]Proof: By symmetry, it suffices to prove that there is some constant $c$ such that for all strings $s$, $K_1(s) \leq K_2(s) + c$.

Now, suppose there is a program in the language $L_1$ which acts as an interpreter for $L_2$:

function InterpretL2(string p)

return p()

Running InterpretL2 on input p returns the result of running p.

Thus, if $P$ is a program in $L_2$ which is a minimal description of $s$, then InterpretL2(P) returns the string $s$. The length of this description of $s$ is the sum of

- The length of the program

InterpretL2, which we can take to be the constant $c$. - The length of $P$ which by definition $=K_2(s)$.

This proves the desired upper bound. $\blacksquare$

Basic results

In the following discussion, let $K(s)$ be the complexity of the string $s$.

Theorem. There is a constant $c$ such that $\forall s, K(s) \leq \vert s \vert + c$.

Theorem. There exist strings of arbitrarily large Kolmogorov complexity. Formally: for each $n \in \mathbb{N}$, there is a string $s$ with $K(s) \geq n$.

Proof: Otherwise all of the infinitely many possible finite strings could be generated by the finitely many programs with a complexity below $n$ bits. $\blacksquare$

Theorem. $K$ is not a computable function. In other words, there is no program which takes a string $s$ as input and produces the integer $K(s)$ as output.

Chain rule for Kolmogorov complexity:

\[K(X,Y) = K(X) + K(Y \vert X) + O(\log(K(X,Y)))\]It states that the shortest program that reproduces $x$ and $Y$ is no more than a logarithmic term larger than a program to reproduce $x$ and a program to reproduce $Y$ given $X$. Using this statement, one can define an analogue of mutual information for Kolmogorov complexity.

Compression

A string $s$ is compressible by a number $c$ if it has a description whose length does not exceed $\vert s \vert −c$ bits. This is equivalent to saying that $K(s) \leq \vert s \vert −c$. Otherwise, $s$ is incompressible by $c$.

A string incompressible by 1 is said to be simply incompressible––by the pigeonhole principle, which applies because every compressed string maps to only one uncompressed string, incompressible strings must exist, since there are $2^n$ bit strings of length n, but only $2^n - 1$ shorter strings.

There are $2^n$ bitstrings of length $n$. The number of descriptions of length not exceeding $n-c$ is given by the geometric series:

\[1 + 2 + 2^2 + ... + 2^{n-c} = 2^{n-c+1} - 1\]There remain at least $2^n - 2^{n-c+1} + 1$ bitstrings of length $n$ that are incompressible by $c$. To determine the probability, divide by $2^n$.

Chaitin’s incompleteness theorem

We know that, in the set of all possible strings, most strings are complex in the sense that they cannot be described in any significantly “compressed” way. However, it turns out that the fact that a specific string is complex cannot be formally proven, if the complexity of the string is above a certain threshold.

Theorem. There exists a constant $L$ (which only depends on the particular axiomatic system and the choice of description language) such that there does not exist a string $s$ for which the statement

\[K(s) \geq L \text{(as formalized in } S \text{)}\]can be proven within the axiomatic system $s$.

Proof by contradiction using Berry’s paradox. 略

Time/space complexity classes: P & NP @ class (Lecture 7)

Resource bounds:

- $M$ has (worst case) running time $T$ if $\forall$ string $x$, $M$ halts after at most $T(\vert x \vert)$ steps.

- $M$ has (worst case) space usage $s$ if $\forall$ string $x$, $M$ writes on at most $S(\vert x \vert)$ tape cells.

Basic Complexity Class:

- $\text{DTIME}(f(n)) = \lbrace L \vert L \text{ decided by a (deterministic) TM with running time } O(f(n)) \rbrace$

- $\text{D}$ for “deterministic”

- Formally, $L \in TIME(f(n))$ if there is a TM $M$ and a constant $c$ such that

- $M$ decides $L$, and

- $M$ runs in time $c \cdot f$;

- i.e., for all $x$ (of length at least 1), $M(x)$ halts in at most $c \cdot f(\lvert x \rvert)$ steps.

- E.g. $\text{DTIME}(n^2) = \text{set of all problems that can be solved in quadratic time}$

- $\text{DSPACE}(f(n)) = \lbrace L \vert L \text{ decided by a TM that uses spaces } O(f(n)) \rbrace$

- Language / decision problem = set of strings (yes-instances)

- Complexity class = set of languages

Standard Complexity Classes:

- $\text{P} = \lbrace \text{problems that can be solved (by a deterministical TM) in poly time} \rbrace$

- $\text{P} = \bigcup_{k=1,2,\dots} \text{DTIME}(n^k)$

- 如果把 $P$ 看做 property 的话,$P$ 可以简单描述为 “deterministic poly time”

- “deterministic” = “deterministically solvable in time” = “solvable in time by a deterministical TM”

- $\text{P} = \bigcup_{k=1,2,\dots} \text{DTIME}(n^k)$

- $\text{PSPACE} = \lbrace \text{problems that can be solved using poly space} \rbrace$ $\text{PSPACE} = \bigcup_{k=1,2,\dots} \text{DSPACE}(n^k)$

- $\text{EXP} = \lbrace \text{problems that can be solved by in exponential time} \rbrace$

- $\text{EXP} = \bigcup_{k=1,2,\dots} \text{DTIME}(2^{n^k})$

- $\text{L} = \lbrace \text{problems that can be solved using log space} \rbrace$

- $\text{L} = \text{DSPACE}(\log n)$

Translating from “Complexity Theory Speak”:

- Is $X \in \text{PSPACE}$?

- Can problem $x$ be solved using polynomial space?

- Is $\text{PSPACE} \subseteq P$?

- Can every problem solvable in polynomial space also be solved in polynomial time?

- This is true: $P \subseteq \text{PSPACE}$

Relationships between Complexity Classes:

- $\forall f(n), \text{DTIME}(f(n)) \subseteq \text{DSPACE}(f(n))$

- This follows from the observation that a TM cannot write on more than a constant number of cells per move.

- If $f(n) = O(g(n))$, then $\text{DTIME}(f(n)) \subseteq \text{DTIME}(g(n))$

Complementation @ Complement Classes and the Polynomial Time Hierarchy:

- 这里先声明下,全集可以表示为 $\Omega$、$\sum^{\star}$ 或者 $\lbrace 0,1 \rbrace^{\star}$

- The complement of a decision problem $\mathcal{L}$, denoted $co\mathcal{L}$, is the set of “No”-instances of $\mathcal{L}$.

- 一般来说,我们可以认为 $co\mathcal{L} = \overline{\mathcal{L}} = \Omega \setminus \mathcal{L}$

- 严格来说,$\mathcal{L} \cup co\mathcal{L} = WF_{\mathcal{L}} \subseteq \Omega$ where $WF_{\mathcal{L}}$ is the set of well-formed strings describing “Yes” and “No” instances. That is, $co\mathcal{L} = WF_{\mathcal{L}} − \mathcal{L}$.

- 只是通常会限定 $WF_{\mathcal{L}} = \Omega$

- 举个例子:Every positive integer $x>1$ is either composite (合数) or prime (质数)

- 如果限定 $\Omega = WF_{\mathcal{L}} = \lbrace x \vert x \text{ is a positive integer and } x > 1 \rbrace$

- $\mathcal{L} = \lbrace x \vert x \text{ is prime } \rbrace$

- $co\mathcal{L} = \overline{\mathcal{L}} = \lbrace x \vert x \text{ is not prime }\rbrace = \lbrace x \vert x \text{ is composite }\rbrace$

- 如果仅限定 $\Omega = WF_{\mathcal{L}} = \lbrace x \vert x \text{ is a positive integer }\rbrace$

- $co\mathcal{L} = \overline{\mathcal{L}} = \lbrace x \vert x \text{ is not prime }\rbrace \neq \lbrace x \vert x \text{ is composite }\rbrace$

- 因为 1 既不是 prime 也不是 composite

- 如果限定 $\Omega = WF_{\mathcal{L}} = \lbrace x \vert x \text{ is a positive integer and } x > 1 \rbrace$

- The complement of a complexity class is the set of complements of languages in the class.

- $\mathcal{C} = \lbrace \mathcal{L} \vert \mathcal{L} \text{ has complexity } \mathcal{C} \rbrace$

- $co\mathcal{C} = \lbrace co\mathcal{L} \vert \mathcal{L} \in \mathcal{C} \rbrace$

- 注意 $co\mathcal{C}$ 和 $\overline{\mathcal{C}}$ 没有半毛钱的关系

- $\overline{\mathcal{C}} = \lbrace \mathcal{L} \vert \mathcal{L} \text{ does NOT have complexity } \mathcal{C} \rbrace$

- $co\mathcal{C} = \lbrace \mathcal{L} \vert \mathcal{L} \text{‘s complement has complexity } \mathcal{C} \rbrace$

- $\mathcal{L} \text{‘s complement has complexity } \mathcal{C}$ 并不能说明 $\mathcal{L} \text{ has } \mathcal{C} \text{ or not}$

- Theorem 1. $\mathcal{C}_1 \subseteq \mathcal{C}_2 \hspace{1em} \Rightarrow \hspace{1em} co\mathcal{C}_1 \subseteq co\mathcal{C}_2$

- Theorem 2. $\mathcal{C}_1 = \mathcal{C}_2 \hspace{1em} \Rightarrow \hspace{1em} co\mathcal{C}_1 = co\mathcal{C}_2$

- Closure under complementation means: $\mathcal{C} = co\mathcal{C}$

- We say such class $\mathcal{C}$ are “closed under complementation”.

- Theorem 3. If $\mathcal{C}$ is a deterministic time or space complexity class, then $\mathcal{C} = co\mathcal{C}$.

- E.g.

- $co\text{TIME}(f(n)) = TIME(f(n))$

- $coP = P$

- 翻译一下:如果 $\mathcal{L}$ can be solved by in poly time $\Rightarrow$ $co\mathcal{L}$ can also be solved by in poly time

- vise versa

- $co\text{PSPACE} = \text{PSPACE}$

- Why? For any class $\mathcal{C}$ defined by a deterministic TM $M$, just switch accpet/reject behavior and you get a TM $coM$ that decide $co\mathcal{C}$

- i.e. $M(\mathcal{L}) = \text{yes} \hspace{1em} \Rightarrow \hspace{1em} coM(co\mathcal{L}) = \text{yes}$, in the same bound of time or space.

- E.g.

$NP$:

- $NP$ 可以描述为 nondeterministic polynomial time

- “nondeterministic” = “nondeterministically solvable in time” = “solvable in time by a nondeterministical TM”

- Definition 1. A problem is assigned to the $NP$ class if it is solvable in polynomial time by a nondeterministic TM.

- $NP = \lbrace \text{problems that can be solved by a nondeterministic TM in poly time} \rbrace$

- Definition 2. $NP = \text{set of decision problems } L$, where

- $L = \lbrace x \vert \exists w : M(x,w) = 1 \rbrace$

- $M$ halts in polynomial time, as a function of $\lvert x \rvert$ alone.

- $x$: instance

- $w$: proof / witness / solution

- 简单理解就是,我们没有办法直接确定是否有 $x \in L$ (nondeterministic),只能通过 $(x,w)$ 是否满足 $L$ 的条件来判断这个 $x$ 是否有 $\in L$

- 举例待补充

$co\text{NP}$

- Definition. $co\text{NP} = \text{set of decision problems } L$, where

- $L = \lbrace x \vert \not\exists w : M(x,w) = 1 \rbrace$

- 等价于 $L = \lbrace x \vert \forall w : M(x,w) = 0 \rbrace$

- 等价于 $L = \lbrace x \vert \forall w : M(x,w) = 1 \rbrace$

- $M$ halts in polynomial time, as a function of $\lvert x \rvert$ alone.

- 举例待补充

$P$ vs $NP$ vs $co\text{NP}$

Theorem 1. $P \subseteq NP$

Proof: Take any $L \in P$, then $\exists$ a PTM $M$ such that $L = \lbrace x \vert M(x) = 1 \rbrace$.

Define $M’(x,w) = \lbrace \text{ignore } w; \text{return } M(x) \rbrace$. Therefore $L=\lbrace x \vert \exists w : M,(x,w) = 1 \rbrace \in NP$ $\blacksquare$

Theorem 2. $P \subseteq co\text{NP}$

Proof: Ditto. $\blacksquare$

Closure Properties of $NP$, $co\text{NP}$

- If $A, B \in \text{NP}$, then

- $A \cap B \in \text{NP}$

- $A \cup B \in \text{NP}$

- If $A, B \in co\text{NP}$, then

- $A \cap B \in co\text{NP}$

- $A \cup B \in co\text{NP}$

Karp reductions, NP hardness/completeness (Lecture 8)

Karp Reduction: Define $A \leq_P B$ (“$A$ Karp-reduces to $B$”) to mean:

\[\exists \text{ poly time function } f: \quad \forall x : x \in A \iff f(x) \in B\]$\leq_P$ is transive: $A \leq_P B \leq_P C \Rightarrow A \leq_P C$.

$L$ is $\text{NP}$-hard if $\forall A \in \text{NP} : A \leq_P L$, which means:

- $L$ is at least as hard as EVERY problem in $\text{NP}$

- If you could solve $L$ in poly-time then $\text{P} = \text{NP}$

$L$ is $\text{NP}$-compelte if:

- $L$ is $\text{NP}$-hard

- $L \in \text{NP}$

which means:

- $L$ is (one of) the hardest problem in $\text{NP}$

- It’s impossible to prove $\text{P} = \text{NP}$ by solving $L$ in poly-time, because $L \in \text{NP}$

Theorem. If $A \leq_P B$ and $A$ is $\text{NP}$-hard, then $B$ is $\text{NP}$-hard.

Proof: $\forall L \in \text{NP} : L \leq_P A \leq_P B \Rightarrow L \leq_P B$ $\blacksquare$

- If $A \leq_P B$ and $B \in \text{P}$ $\Rightarrow A \in \text{P}$

- If $A \leq_P B$ and $B \in \text{NP}$ $\Rightarrow A \in \text{NP}$

- Suppose $A$ is $\text{NP}$-compelte; then $A \in P \iff \text{P} = \text{NP}$

“Canonical” NP-complete Problem $X=\lbrace \langle M,x,T \rangle \vert \exists w : M \text{ accepts } (x,w) \text{ in } \vert T \vert \text{ steps} \rbrace$.

Claim. $X \in \text{NP}$

Proof: Can be checked in poly time as a function of length of $M,x,T$ (by universal TM). $\blacksquare$

Claim. $\forall A \in \text{NP}, A \leq_P X$

Proof: $A \in \text{NP}$, so $A = \lbrace x \vert \exists w : M_A(x,w) = 1 \rbrace$ where the running time of $M_A$ is $p(\vert x \vert)$.

Define $1^{p(\vert x \vert)}$ a string of $p(\vert x \vert)$ ones. Consider $f(x)=\langle M_A,x,1^{p(\vert x \vert)}\rangle$.

$\forall x \in A : f(x) \in X$. Therefore $A \leq_P X$. $\blacksquare$

Cook-Levin Theorem & Natural NP-complete problems (Lecture 9 & 10)

Cook-Levin Theorem. $SAT = \lbrace \varphi \vert \varphi \text{ is a satisfiable boolean formula} \rbrace$ is NP-complete

How to $SAT \leq_P 3SAT$:

- For a long clause in $SAT$, say $x_1 \vee x_3 \vee \overline{x_4} \vee x_2 \vee x_5$,

- Introduce $s$ and break it into $(x_1 \vee x_3 \vee s) \wedge (\overline{s} \vee \overline{x_4} \vee x_2 \vee x_5)$.

- If $s$ is TRUE, then $\overline{x_4} \vee x_2 \vee x_5$ must be TRUE

- If $s$ is FALSE, then $x_1 \vee x_3$ must be TRUE

- which means, one of $x_1 \vee x_3$ and $\overline{x_4} \vee x_2 \vee x_5$ must be true

- Further break into: $(x_1 \vee x_3 \vee s) \wedge (\overline{s} \vee \overline{x_4} \vee t) \wedge (\overline{t} \vee x_2 \vee x_5)$

NP in terms of nondeterministic computation (Lecture 11)

$NP = \lbrace \text{problems that can be solved by a nondeterministic TM in poly time} \rbrace$.

Nondeterministic TM:

- In state $q$, reading char $c$, transition $\delta(q,c)$ is a set of possible / legal actions.

- Thus we can draw a graph of configurations for a Nondet TM on a given input $x$.

- 注意 configuration graph 不是 general 的,不是针对所有的 input $x$ 的;而是对每一个特定的 $x$,我们都可以画一个 configuration graph

- configuration graph 也可以称为 computation tree

- Nondeterministic TM is just imaginary, not a realistic model for computation. Just convenient for computational theory.

Nondeterministic TM acceptance:

- 在 configuration tree 中,每一个 internal node(非 leaf) 都是一个 configuration

- A configuration can have many outgoing edges in the configuration graph.

- 我们称每个可以走的 outgoing edge 为一个 choice

- Define computation thread on $x$: a path start at $(q_{\text{start}}, x, \cdot)$

- 所以每个 computation thread 都可以看做一个 sequence of choices

- Define accepting thread: a computation thread eventually reaches $(q_{\text{accept}}, \cdot, \cdot)$

- NTM accepts $x$ $\iff \exists$ an accepting thread on $x$

- NTM rejects $x$ $\iff \not \exists$ an accepting thread on $x$ $\iff$ all threads rejects $x$

- Running time of NTM on $x$: max $\text{#}$ of steps among all threads

- Space usage of NTM on $x$: max tape usage among all threads

Pitfalls of NTM:

- Nondeterministic “algorithm” defined in terms of local behavior within each thread

- Acceptance/rejection defined in terms of global/collective behavior of all threads

- Threads can’t “communicate” with each other

$NTIME$, $NSPACE$ & $NP$

- $\text{NTIME}(f(n)) = \lbrace L \vert L \text{ accepted by a NTM with running time } O(f(n)) \rbrace$

- $\text{NSPACE}(f(n)) = \lbrace L \vert L \text{ accepted by a NTM that uses spaces } O(f(n)) \rbrace$

- $\text{NP} = \bigcup_{k=1,2,\dots} \text{NTIME}(n^k)$

Claim: for $\text{NP}$, the witness-checking definition and the NTM definition are equivalent.

Proof: 略

Hierarchy theorems (Lecture 12 & 13)

Complexity Separations–the holy grail of computational complexity:

- Containments: $P \subseteq NP, NP \subseteq PSPACE, DTIME(n) \subseteq DSPACE(n)$, etc

- Separations: $P \neq NP?, P \neq PSPACE?, NP \neq coNP$?

Deterministic Hierarchy:

- $\text{DTIME}(n) \subsetneq \text{DTIME}(n^2) \text{DTIME}(n^3) \dots$

- $\text{DSPACE}(n) \subsetneq \text{DSPACE}(n^2) \text{DSPACE}(n^3) \dots$

How to prove $\text{DTIME}(n) \neq \text{DTIME}(n^2)$?

- Better Diagonalization

- 略

Deterministic Time Hierarchy Theorem:

- $f(n) \log f(n) = o(g(n)) \Rightarrow \text{DTIME}(f(n)) \subsetneq \text{DTIME}(g(n))$

- Thus, $\text{P} \subsetneq \text{EXP}$

Deterministic Space Hierarchy Theorem:

- Ditto

- Thus, $\text{L} \subsetneq \text{PSPACE}$

Non-deterministic Hierarchy:

- $\text{NTIME}(n) \subsetneq \text{NTIME}(n^2) \subsetneq \text{NTIME}(n^3) \dots$

- $\text{NSPACE}(n) \subsetneq \text{NSPACE}(n^2) \subsetneq \text{NSPACE}(n^3) \dots$

How to prove $\text{NTIME}(n) \neq \text{NTIME}(n^2)$?

- Delayed/lazy Diagonalization

- 略

Non-deterministic Time Hierarchy Theorem:

- $f(n+1) = o(g(n)) \Rightarrow \text{NTIME}(f(n)) \subsetneq \text{NTIME}(g(n))$

- Thus, $\text{NP} \subsetneq \text{NEXP}$

Non-deterministic Space Hierarchy Theorem:

- Ditto

- Thus, $\text{NL} \subsetneq \text{NPSPACE}$

Ladner’s theorem & NP-intermediate problems (Lecture 14)

Questions:

- Most natural problems are in $P$ or $NP$-complete.

- Any problem between $P$ and $NP$-complete?

Ladner’s theorem:

- If $\text{P} \neq \text{NP}$ then we can construct $M^{\ast}$ such that:

- $L(M^{\ast}) \in \text{NP}$

- $L(M^{\ast}) \notin \text{P}$

- $L(M^{\ast}) \notin \text{NP}$-complete

Claim: all finite languages are in $P$.

Proof: A finite language is a finite list of all yes-instances. Build an algorithm to compare input to this list and running time is bounded. $\blacksquare$

Claim: if $L = 3SAT \setminus A$ where $A$ is finite, then $L$ is $NP$-complete.

Proof: 注意这里 $3SAT \setminus A$ 并不是表示 “infinite $3SAT$”,只是简单的表示 “$3SAT$ 抠掉了一个 finite 集合”,所以也称为 “finite modification of $3SAT$” (这里 modification 就是 exclusion 的意思)。

$3SAT \leq_P L$:

- If $x \in A$ (比方说 $A$ 只有 $x_1,x_2,x_3$ 三个 literal 并只有两个 clause), convert $x$ to an instance in $L$

- If $x \notin A$, $x \in L$ by definition.

$\blacksquare$

Ladner’s theorem 证明略

Polynomial hierarchy (Lecture 15)

Question:

- $\text{NP}$ problem: $\lbrace x \vert \exists w : M(x, w) = 1 \rbrace$

- $co\text{NP}$ problem: $\lbrace x \vert \forall w : M(x, w) = 1 \rbrace$

- $M$ runs in poly($\lvert x \rvert$) time

- What about problems like $\lbrace x \vert \exists w_1 \forall w_2: M(x, w_1, w_2) =1 \rbrace$?

Polynomial Hierarchy:

- Define $\Sigma_k$ problem: $\lbrace x \vert \exists w_1 \forall w_2 \cdots \square w_k: M(x, w_1, w_2, \dots, w_k)=1 \rbrace$

- Define $\Pi_k$ problem: $\lbrace x \vert \forall w_1 \exists w_2 \cdots \square w_k: M(x, w_1, w_2, \dots, w_k)=1 \rbrace$

- Generally, 这里的 $M$ 应该是一个 Nondet TM

- $M$ runs in poly($\lvert x \rvert$) time

- E.g.

- $\Sigma_0 = \text{P}$

- $\Sigma_1 = \text{NP}$

- $\Pi_0 = co\text{P} = \text{P}$

- $\Pi_1 = co\text{NP}$

- $\Pi_k = co\Sigma_k$

- $\text{#}$ of quantifiers doesn’t matter. E.g. $L = \lbrace x \vert \forall w_1 \forall w_2 \exists w_3 \exists w_4 \exists w_5: M(x, w_1, w_2, w_3, w_4, w_5) =1 \rbrace$

- Obviously, $L \in \Pi_5$

- But also $L = \lbrace x \vert \forall (w_1,w_2) \exists (w_3, w_4, w_5): M(x, \vec{w})=1 \rbrace$, so $L \in \Pi_2$

Properties (for all $k$):

- $\Sigma_k \subseteq \Sigma_{k+1} \cap \Pi_{k+1}$

- $\Sigma_k \subseteq \text{PSPACE}$

- $\Sigma_k = co\Pi_k$

- $\Pi_k \subseteq \Sigma_{k+1} \cap \Pi_{k+1}$

- $\Pi_k \subseteq \text{PSPACE}$

- $\Pi_k = co\Sigma_k$

Proof of $\Sigma_k \subseteq \Sigma_{k+1} \cap \Pi_{k+1}$:

Suppose $L = \lbrace x \vert \exists w_1 \forall w_2 \cdots \exists w_k: M(x, \vec{w})=1 \rbrace \in \Sigma_k$.

Then $L = \lbrace x \vert \exists w_1 \forall w_2 \cdots \exists w_k \forall w_{junk}: M(x, \vec{w})=1 \rbrace \in \Sigma_{k+1}$ and $L = \lbrace x \vert \forall w_{junk} \exists w_1 \forall w_2 \cdots \exists w_k: M(x, \vec{w})=1 \rbrace \in \Pi_{k+1}$ $\blacksquare$

Polynomial Hierarchy:

- Define $\text{PH} = \bigcup_{k=0,1,\dots} \Sigma_k = \bigcup_{k=0,1,\dots} \Pi_k$

- Conjecture: for all $k > 0$: $\Sigma_k \neq \Pi_k$.

- Since $\Sigma_k, \Pi_k \in \text{PSPACE}$ for all $k > 0$, we have $\text{PH} \in \text{PSPACE}$. Each $\Sigma_k$ or $\Pi_k$ is called a level in the hierarchy.

Theorem: If $\Sigma_k = \Pi_k$ for some $k$, then $\text{PH} = \Sigma_k$ (denoted as “$\text{PH}$ collapses to $k$”).

Proof: (1) If $\Sigma_k = \Pi_k$, then $\Sigma_k = \Sigma_{k+1}$.

Take any $L \in \Sigma_{k+1}$.

$L = \lbrace x \vert \exists w_1 \forall w_2 \cdots \square w_{k+1}: M(x, \vec{w})=1 \rbrace$. $M$ runs in poly time.

Using the same $M$, define $L’ = \lbrace (x,w_1) \vert \forall w_2 \exists w_3 \cdots \square w_{k+1}: M(x, \vec{w})=1 \rbrace$. Therefore $L’ \in \Pi_{k}$.

Since $\Sigma_k = \Pi_k$, $L’$ can be rewritten as $L’ = \lbrace (x,w_1) \vert \exists w_2’ \forall w_3’ \cdots \square w_{k+1}’: M(x, w_1 \vec{w}’)=1 \rbrace$.

Reconstruct $L$ using $L’$:

\[\begin{align} L &= \lbrace x \vert \exists w_1 \exists w_2' \forall w_3' \cdots \square w_{k+1}': M(x, w_1, \vec{w}')=1 \rbrace \newline &= \lbrace x \vert \exists (w_1,w_2') \forall w_3' \cdots \square w_{k+1}': M(x, w_1, \vec{w}')=1 \rbrace \in \Sigma_k \end{align}\](2) If $\Sigma_k = \Pi_k = \Sigma_{k+1}$, then $\Sigma_{k+1} = \Sigma_k = co\Pi_k = co\Sigma_{k+1} = \Pi_{k+1}$ $\blacksquare$

Oracle computations and a characterization of PH (Lecture 16 & 17)

Let $A$ be some decision problem and $M$ be a class of Turing machines. Then $M^A$ is defined to be the class of machines obtained from $M$ by allowing instances of $A$ to be solved in one step.

- We call the “one-step” (not necessarily $O(1)$, but a guaranteed time bound) algorithm to $A$ an oracle.

- E.g. we can declare an oracle to solve $3SAT$ in poly time.

Similarly, if $M$ is a class of Turing machines and $C$ is a complexity class, then $M^C = \bigcup_{A \in C} M^A$. If $L$ is a complete problem for $C$, and the machines in $M$ are powerful enough to compute polynomial-time computations, then $M^C = M^L$.

Oracle complexity classes:

- Let $A$ be a decision problem, then

- $\text{P}^A = \lbrace \text{decision problems solvable by a poly-time } A\text{-OTM} \rbrace$

- $\text{P}^A = \lbrace L(M^A) \vert M \text{ is a poly-time OTM} \rbrace$

- $\text{NP}^A = \lbrace \text{decision problems solvable by a non-det poly-time } A\text{-OTM} \rbrace$

- $\text{P}^A = \lbrace \text{decision problems solvable by a poly-time } A\text{-OTM} \rbrace$

- Let $\text{C}$ be a complexity class, then

- $\text{P}^\text{C} = \bigcup_{L \in \text{C}} \text{P}^L$

- $\text{P}^\text{C} = \lbrace \text{problems solvable by a poly-time OTM with some problem from C as its oracle} \rbrace$

- $\text{NP}^C = \bigcup_{L \in \text{C}} \text{NP}^L$

- Differenet threads of an $\text{NP}$ computation can ask different oracle queries for $L \in \text{C}$

- $\text{P}^\text{C} = \bigcup_{L \in \text{C}} \text{P}^L$

Note: Overloaded Notation!

- $M^L$: special TM $M$ with oracle for a specific decision problem $L$

- $\text{NP}^L$: complexity class $\text{NP}$ with oracle for a specific decision problem $L$

- $\text{NP}^\text{C}$: complexity class $\text{NP}$ with oracle for any decision problem $\in \text{C}$

Claim: If $L$ is a $\text{C}$-complete problem, then $\text{P}^L = \text{P}^\text{C}, \text{NP}^L = \text{NP}^\text{C}$ etc.

Proof: By Karp reduction.

注意,我们的确是有 $\text{P}^\text{C} = \bigcup_{L \in \text{C}} \text{P}^L$,但是我们不要求一定要 $\exists L$ 是 $\text{C}$-complete 的。 $\blacksquare$

- $\text{P}^{\text{P}} = \text{P}$

- $\text{NP}^{\text{P}} = \text{NP}$

- $\text{P}^{\text{NP}} = \text{P}^{co\text{NP}}$

- $co\text{NP} \subseteq \text{P}^{\text{NP}}$

$\text{PH}$ new characterization:

- $\Sigma_0 = \Pi_0 = \text{P}$

- $\Sigma_k = \text{NP}^{\Sigma_{k-1}} = \text{NP}^{\Pi_{k-1}}$

- $\Sigma_0 = \text{P}$

- $\Sigma_1 = \text{NP}^{\text{P}} = \text{NP}$

- $\Sigma_2 = \text{NP}^{\text{NP}}$

- $\Sigma_3 = \text{P}^{\text{NP}^{\text{NP}}}$

- $\Pi_{k} = co\Sigma_k$

Claim: $\Sigma_k = \text{NP}^{\Pi_{k-1}}$

Proof: By induction on $k$.

(1) [$\Sigma_k \subseteq \text{NP}^{\Pi_{k-1}}$]

Take any $L \in \Sigma_{k}$, so $L = \lbrace x \vert \exists w_1 \forall w_2 \cdots \square w_{k}: M(x, \vec{w})=1 \rbrace$. $M$ runs in poly time.

Goal: show $x \in L \Rightarrow$ $x$ is accepted by a $\text{NP}$TM $M^{\ast}$ with $\Pi_{k-1}$ oracle, i.e. $x \in L(M^{\ast})$.

Using the same $M$, define $L’ = \lbrace (x,w_1) \vert \forall w_2 \exists w_3 \cdots \square w_{k}: M(x, \vec{w})=1 \rbrace$. Therefore $L’ \in \Pi_{k-1}$.

Choose $L’$ as my oracle. Define $M^{\ast}(x)$:

- Non-deterministically guess $w_1$

- 不同的 guess of $w_1$ 对应不同的一条 computation thread

- Call oracle to see $(x,w_1) \stackrel{?}{\in} L’$.

- Accept $x$ if oracle says yes.

- 相当于我们穷举了所有 $w_1$ 的取值,并 query $(x,w_1) \stackrel{?}{\in} L’$

- 如果有某个 query 返回了 YES,说明 $\exists$ an accepting thread $\Rightarrow$ NTM accepts $x$

- 如果所有的 query 都返回 NO,说明 $\not \exists$ an accepting thread $\Rightarrow$ NTM rejects $x$

(2) [$\Sigma_k \supseteq \text{NP}^{\Pi_{k-1}}$]

Suppose there is a $\text{NP}$TM $M^{\ast}$ using $L’ = \lbrace x \vert \forall w_2 \exists w_3 \cdots \square w_{k}: M’(x, \vec{w})=1 \rbrace \in \Pi_{k-1}$ as its oracle.

Goal: show $x \in L(M^{\ast}) \Rightarrow$ $\exists w_1: (x, w_1) \in L’’$ for some $\Pi_{k-1}$ set $L’’$.

For simplicity, assume that each thread of $M^{\ast}$ makes only 1 query to the oracle. Define an existentially quantified variable $y_1$ that include all computation threads of $M^{\ast}$.

Suppose on input $x$, $\exists$ an accepting thread of $M^{\ast}$, say $y$, that makes a query $q \stackrel{?}{\in} L’$.

CASE 1: on accepting thread $y$, oracle answer is YES.

$x \in L(M^{\ast}) \Rightarrow$ $\exists y_1: (x, y_1) \in L’$ (i.e. 我们的 $L’’$ 直接取 $L’$ 就好了).

CASE 2: on accepting thread $y$, oracle answer is NO.

$x \in L(M^{\ast}) \Rightarrow$ $\exists y_1: (x, y_1) \in coL’$

Because $coL’ \in \Sigma_{k-1}$, $\lbrace x \vert \exists y_1: (x, y_1) \in coL’ \rbrace \in \Sigma_{k-1}$ (合并 $\exists y_1$ 和 $\exists w_2$). Based on the fact that $\Sigma_{k-1} \subseteq \Sigma_{k}$, we can still conclude that $\lbrace x \vert \exists y_1: (x, y_1) \in coL’ \rbrace \in \Sigma_{k}$. $\blacksquare$

Space complexity (in terms of configuration graphs) (Lecture 18)

- $\text{PSPACE} = \bigcup_{k=1,2,\dots} \text{DSPACE}(n^k)$

- $\text{NPSPACE} = \bigcup_{k=1,2,\dots} \text{NSPACE}(n^k)$

- $\text{L} = \text{DSPACE}(\log n)$

- $\text{NL} = \text{NSPACE}(\log n)$

Configuration graphs:

- Two tapes:

- Read-only input tape

- Read-write work tape (calculate space usage on this tape)

- Transition: $(q, w, i, j) \rightarrow (q’, w’, i’, j’)$

- $q$: state

- $w$: content of work tape

- Here, $\lvert w \rvert \leq$ space usage of the TM

- $i$: input tape head

- $j$: work tape head

- Possible $\text{#}$ of configurations

- Suppose $M$ uses $f(n)$ space on input of length $n$

- $C_M(n) \leq \lvert Q \rvert \cdot \lvert \Gamma \rvert \cdot n \cdot f(n) = 2^{O(f(n))}$, as long as $f(n) \geq \log n$

- Note that since the input is fixed and the input tape is read-only, we do not need to consider all possible length-$n$ strings that can be written on the input tape.

Inclusions

- We alread had $\text{DTIME}(f(n)) \subseteq \text{DSPACE}(f(n))$

- $\text{DSPACE}(f(n)) \subseteq \text{DTIME}(2^{O(f(n))})$

- $\text{NSPACE}(f(n)) \subseteq \text{NTIME}(2^{O(f(n))})$

Proof:

Let $L \in \text{DSPACE}(f(n))$. Then there is a TM $M$ that decides $L$ using $f(n)$ space. Show there is a TM $M’$ that decides $L$ in $2^{O(f(n))}$ time.

Construct $M’$ this way:

M'(x):

run (orignal) M on x for C_M(n) steps

if M accepted x

return YES

else

return NO

- $M’$ runs in $C_M(n)$ time

- If $M$ hasn’t halted in $C_M(n)$ steps, it must be in an infinite loop

$\blacksquare$