Digest of Advanced R (亟待更新)

P.S. It was 2015 when the 1st edition of this book came out and I wrote this post. Now Hadley Wickham is working on the 2nd edition where a lot contents changed.

TODO: Update or delete this post.

Part I. Foundations

1. Data structures

| Homogeneous | Heterogeneous | |

|---|---|---|

| 1-d | Atomic vector | List |

| 2-d | Matrix | Data frame |

| n-d | Array |

Note that R has no 0-dimensional, or scalar types. Individual numbers or strings, which you might think would be scalars, are actually vectors of length one.

1.1 Quiz

Q: What are the three properties of a vector, other than its contents?

- The three properties of a vector

xaretypeof(x),length(x), andattributes(x).

Q: What are the four common types of atomic vectors? What are the two rare types?

- The four common types of atomic vector are logical, integer, double (sometimes called numeric), and character. The two rarer types are complex and raw.

- atomic 本身并没有指特定的某种类型,你理解为 primitive 就好了

Q: What are attributes? How do you get them and set them?

- Attributes allow you to associate arbitrary additional metadata to any object. You can get and set individual attributes with

attr(x, "y")andattr(x, "y") <- value;or get and set all attributes at once withattributes(x)(implemented as a list).

Q: How is a list different from an atomic vector? How is a matrix different from a data frame?

- The elements of a list can be any type (even a list); the elements of an atomic vector are all of the same type. Similarly, every element of a matrix must be the same type; in a data frame, the different columns can have different types.

Q: Can you have a list that is a matrix? Can a data frame have a column that is a matrix?

- You can make “list-array” by assuming dimensions to a list. You can make a matrix a column of a data frame with

df$x <- matrix(), or usingI()when creating a new data framedata.frame(x = I(matrix())).

1.2 Atomic Vectors

c() means “combine”.

# 你没看错,你没写小数点也默认是 double

dbl_var <- c(1, 2, 4)

# With the L suffix, you get an integer rather than a double

int_var <- c(1L, 6L, 10L)

# Use TRUE and FALSE (or T and F) to create logical vectors

log_var <- c(TRUE, FALSE, T, F)

# string vector

chr_var <- c("these are", "some strings")

Atomic vectors are always flat, even if you nest c()’s:

c(1, c(2, c(3, 4)))

#> [1] 1 2 3 4

除了 typeof(x) 外,还有:

is.character(x)is.double(x)is.integer(x)is.logical(x)is.numeric(x): 虽然一般管 double 叫 numeric,但是is.numeric(x) == is.double(x) || is.integer(x)- or, more generally,

is.atomic(x).

1.3 Lists

Lists are sometimes called recursive vectors, because a list can contain other lists. This makes them fundamentally different from atomic vectors.

x <- list(list(list(list())))

str(x)

#> List of 1

#> $ :List of 1

#> ..$ :List of 1

#> .. ..$ : list()

is.recursive(x)

#> [1] TRUE

c() will combine several lists into one. If given a combination of atomic vectors and lists, c() will coerce the vectors to lists before combining them.

x <- list(list(1, 2), c(3, 4))

y <- c(list(1, 2), c(3, 4))

str(x)

#> List of 2

#> $ :List of 2

#> ..$ : num 1

#> ..$ : num 2

#> $ : num [1:2] 3 4

str(y)

#> List of 4

#> $ : num 1

#> $ : num 2

#> $ : num 3

#> $ : num 4

Lists are used to build up many of the more complicated data structures in R.

is.list(mtcars)

#> [1] TRUE

mod <- lm(mpg ~ wt, data = mtcars)

is.list(mod)

#> [1] TRUE

注意:如果要把 list 转成 vector,应该用 unlist(x) 而不是 as.vector(x);unlist(x) 会得到一个 named vector,如果不要 name 的话,可以用 unname(unlist(x))。

1.4 Attributes

All objects can have arbitrary additional attributes, used to store metadata about the object. Attributes can be thought of as a named list (with unique names).

The structure() function returns a new object with modified attributes:

structure(1:10, my_attribute = "This is a vector")

#> [1] 1 2 3 4 5 6 7 8 9 10

#> attr(,"my_attribute")

#> [1] "This is a vector"

Note that some attributes (namely “class”, “comment”, “dim”, “dimnames”, “names”, “row.names” and “tsp”) are treated specially and have restrictions on the values which can be set.

The attributes hidden by attributes(x) are the three most important:

- “names”, a character vector giving each element a name.

- “dim”, used to turn vectors into matrices and arrays.

- “class”, used to implement the S3 object system.

Each of these three attributes has a specific accessor function to get and set values. When working with these attributes, use names(x), dim(x), and class(x), NOT attr(x, "names"), attr(x, "dim"), and attr(x, "class").

1.5 Factors

Factors are built on top of integer vectors using two attributes:

class(f) == “factor”, which makes them behave differently from regular integer vectors andlevels(f), which defines the set of allowed values.

1.6 Matrices and arrays

Adding a dim(x) attribute to an atomic vector x allows it to behave like a multi-dimensional array. A special case of the array is the matrix, which has two dimensions.

length(x)generalizes tonrow(x)andncol(x)for matrices, anddim(x)for arrays.names(x)generalizes torownames(x)andcolnames(x)for matrices, anddimnames(x), a list of character vectors, for arrays.c()generalizes tocbind()andrbind()for matrices, and toabind()(provided by theabindpackage) for arrays.- You can transpose a matrix with

t(); the generalized equivalent for arrays isaperm().

1.7 Data frames

Under the hood, a data frame is a list of equal-length vectors. This makes it a 2-dimensional structure, so it shares properties of both the matrix and the list.

- This means that a data frame has

names(),colnames(), andrownames(), althoughnames()andcolnames()are the same thing. - The

length()of a data frame is the length of the underlying list and so is the same asncol(); nrow()gives the number of rows.

Because a data.frame is an S3 class, its type reflects the underlying vector used to build it: the list. To check if an object is a data frame, use class() or test explicitly with is.data.frame():

typeof(df)

#> [1] "list"

class(df)

#> [1] "data.frame"

is.data.frame(df)

#> [1] TRUE

You can coerce an object to a data frame with as.data.frame():

- A vector will create a one-column data frame.

- A list will create one column for each element; it’s an error if they’re not all the same length.

- A matrix will create a data frame with the same number of columns and rows as the matrix.

It’s a common mistake to try and create a data frame by cbind()ing vectors together. This doesn’t work because cbind() will create a matrix unless one of the arguments is already a data frame. Instead use data.frame() directly.

2. Subsetting

It’s easiest to learn how subsetting works for atomic vectors, and then how it generalises to higher dimensions and other more complicated objects.

2.1 Quiz

Q: What is the result of subsetting a vector with positive integers, negative integers, a logical vector, or a character vector?

- Positive integers select elements at specific positions, negative integers drop elements; logical vectors keep elements at positions corresponding to TRUE; character vectors select elements with matching names.

Q: What’s the difference between [, [[, and $ when applied to a list?

[selects sub-lists. It always returns a list; if you use it with a single positive integer, it returns a list of length one.[[selects an element within a list.$is a convenient shorthand:x$yis equivalent tox[["y"]].

Q: When should you use drop = FALSE?

- 简单说就是:如果

drop = FALSE,你 subset 的输入和输出会保持同一类型,比如你 subset 一个 matrix,得到的结果还是 matrix,不会变成 vector

Q: If x is a matrix, what does x[] <- 0 do? How is it different to x <- 0?

- If

xis a matrix,x[] <- 0will replace every element with 0, keeping the same number of rows and columns. x <- 0completely replaces the matrix with the value 0.

Q: How can you use a named vector to relabel categorical variables?

- A named character vector can act as a simple lookup table:

c(x = 1, y = 2, z = 3)[c("y", "z", "x")]

2.2 Subsetting operator []

2.2.1 Subsetting atomic vectors

There are six things that you can use to subset a vector:

x <- c(2.1, 4.2, 3.3, 5.4)

# CASE 1. Positive integers, which return elements at the specified positions

x[c(3, 1)]

x[order(x)]

x[c(1, 1)] # Duplicated indices yield duplicated values

x[c(2.1, 2.9)] # Real numbers are silently truncated to integers

# CASE 2. Negative integers, which omit elements at the specified positions

x[-c(3, 1)]

x[c(-1, 2)] # ERROR. You can’t mix positive and negative integers in a single subset

# CASE 3. Logical vectors, which select elements where the corresponding logical value is TRUE

x[c(TRUE, TRUE, FALSE, FALSE)]

x[x > 3]

x[c(TRUE, FALSE)] # If the logical vector is shorter than the vector being subsetted, it will be recycled to be the same length.

x[c(TRUE, TRUE, NA, FALSE)] # A missing value in the index always yields a missing value in the output

# CASE 4. Nothing, which returns the original vector

# More useful for matrices, data frames, and arrays

x[]

# CASE 5. Zero, which returns a zero-length vector

x[0]

# CASE 6. Character vectors, which return elements with matching names

# Only if the vector is named

y <- setNames(x, letters[1:4]) # letters[1:4] = c("a", "b", "c", "d")

y[c("d", "c", "a")]

y[c("a", "a", "a")] # Like integer indices, you can repeat indices

CASE 4, “Subsetting with nothing” can be useful in conjunction with assignment because it will preserve the original object class and structure. Compare the following two expressions:

mtcars[] <- lapply(mtcars, as.integer) # mtcars will remain as a data frame

mtcars <- lapply(mtcars, as.integer) # mtcars will become a list

2.2.2 Subsetting lists

Subsetting a list works in the same way as subsetting an atomic vector. Using [ will always return a list.

2.2.3 Subsetting matrices and arrays

You can subset higher-dimensional structures in three ways:

- With multiple vectors.

- 以 matrix 为例就是

m[rows, cols]- 如果省略了

rows就表示 all rows - 如果省略了

cols就表示 all cols - 逗号不能省,否则就变成了 subsetting with a single vector

- 如果省略了

- 比如

m[1:2, 3:4]就是要 1-2 row, 3-4 col 一共 4 个元素

- 以 matrix 为例就是

- With a single vector.

- 以 matrix 为例就是

m[indices] - indices 是 column-wise 算的,比如一个 5x5 的 matrix,

- index=3 表示

m[3,1] - index=15 表示

m[5,3] m[c(3, 15)]就是把上面这两个元素都选出来

- index=3 表示

- 以 matrix 为例就是

- With a matrix.

- 以 matrix 为例就是

m[indices] - indices 是一个 2-column 的 matrix,每一 row 表示一个下标

- 比如 $ \begin{bmatrix}1 & 1 \newline 3 & 1 \newline 4 & 2 \end{bmatrix} $ 就可以取到

m[1,1],m[3,1],m[4,2]这三个元素

- 比如 $ \begin{bmatrix}1 & 1 \newline 3 & 1 \newline 4 & 2 \end{bmatrix} $ 就可以取到

- 以 matrix 为例就是

如果是 array 的话,上面这三种方法都需要扩展维数。

2.2.4 Subsetting data frames

Data frames possess the characteristics of both lists and matrices:

- if you subset with a single vector, they behave like lists;

- 而 list 和 vector 的逻辑是一样的

- if you subset with two vectors, they behave like matrices.

df <- data.frame(x = 1:3, y = 3:1, z = letters[1:3])

df[df$x == 2, ] # x=2 的 row

df[c(1, 3), ] # 1st and 3rd rows

# select multiple columns

# 这两种方法是等价的,而且返回结果都是 data frame

df[c("x", "z")]

df[, c("x", "z")]

# select single column

# 情况稍微有点不同

df["x"] # return a data frame

df[, "x"] # return a vector

2.3 Subsetting operator [[]]

[[ is similar to [, except it can only return a single value and it allows you to pull pieces out of a list.

You need [[ when working with lists. This is because when [ is applied to a list it always returns a list: it never gives you the contents of the list. To get the contents, you need [[.

Because it can return only a single value, you must use [[ with either a single positive integer or a string:

a <- list(a = 1, b = 2)

a[[1]]

#> [1] 1

a[["a"]]

#> [1] 1

# If you do supply a vector it indexes recursively

b <- list(a = list(b = list(c = list(d = 1))))

b[[c("a", "b", "c", "d")]]

#> [1] 1

# Same as

b[["a"]][["b"]][["c"]][["d"]]

#> [1] 1

Because data frames are lists of columns, you can use [[ to extract a column from data frames: mtcars[[1]], mtcars[["cyl"]].

Simplifying vs. preserving subsetting

- Simplifying subsets returns the simplest possible data structure that can represent the output, and is useful interactively because it usually gives you what you want.

- Preserving subsetting keeps the structure of the output the same as the input, and is generally better for programming because the result will always be the same type.

- Omitting

drop = FALSEwhen subsetting matrices and data frames is one of the most common sources of programming errors.

- Omitting

Unfortunately, how you switch between simplifying and preserving differs for different data types:

| Simplifying | Preserving | |

|---|---|---|

| Vector | x[[1]] |

x[1] |

| List | x[[1]] |

x[1] |

| Factor | x[1:4, drop = T] |

x[1:4] |

| Array | x[1, ] or x[, 1] |

x[1, , drop = F] or x[, 1, drop = F] |

| Data frame | x[, 1] or x[[1]] |

x[, 1, drop = F] or x[1] |

How does it simplify?

# CASE: atomic vector

# Remove names.

x <- c(a = 1, b = 2)

x[1]

#> a

#> 1

x[[1]]

#> [1] 1

# CASE: list

# Return the object inside the list, not a single element list.

# 例子略

# CASE: factor

# Drops any unused levels.

z <- factor(c("a", "b"))

z[1]

#> [1] a

#> Levels: a b

z[1, drop = TRUE]

#> [1] a

#> Levels: a

# CASE: matrix or array

# If any of the dimensions has length 1, drops that dimension.

a <- matrix(1:4, nrow = 2)

a[1, , drop = FALSE]

#> [,1] [,2]

#> [1,] 1 3

a[1, ]

#> [1] 1 3

# CASE: data frame

# If output is a single column, returns a vector instead of a data frame.

df <- data.frame(a = 1:2, b = 1:2)

str(df[1])

#> 'data.frame': 2 obs. of 1 variable:

#> $ a: int 1 2

str(df[[1]])

#> int [1:2] 1 2

str(df[, "a", drop = FALSE])

#> 'data.frame': 2 obs. of 1 variable:

#> $ a: int 1 2

str(df[, "a"])

#> int [1:2] 1 2

Out-of-bound indices

[ and [[ differ slightly in their behaviour when the index is out of bounds (OOB)。我们干脆总结得远一些:

| Operator | Index | Atomic | List |

|---|---|---|---|

[ |

OOB | NA |

list(NULL) |

[ |

NA_real_ |

NA |

list(NULL) |

[ |

NULL |

x[0] |

list(NULL) |

[[ |

OOB | Error | Error |

[[ |

NA_real_ |

Error | NULL |

[[ |

NULL |

Error | Error |

2.4 Subsetting operator $

$ is a shorthand operator, where x$y is equivalent to x[["y", exact = FALSE]]. It’s often used to access variables in a data frame, as in mtcars$cyl or diamonds$carat.

There’s one important difference between $ and [[. $ does partial matching:

x <- list(abc = 1)

x$a

#> [1] 1

x[["a"]]

#> NULL

2.5 Subsetting S3 and S4 objects

- S3 objects

- S3 objects are made up of atomic vectors, arrays, and lists, so you can always pull apart an S3 object using the techniques described above and the knowledge you gain from

str().

- S3 objects are made up of atomic vectors, arrays, and lists, so you can always pull apart an S3 object using the techniques described above and the knowledge you gain from

- S4 objects

- There are also two additional subsetting operators that are needed for S4 objects:

@(equivalent to$), and@is more restrictive than$in that it will return an error if the slot does not exist.

slot()(equivalent to[[).

- There are also two additional subsetting operators that are needed for S4 objects:

S3 and S4 objects can override the standard behaviour of [ and [[ so they behave differently for different types of objects.

2.6 Subsetting and assignment

就两个小地方注意下:

- With lists, you can use subsetting + assignment + NULL to remove components from a list.

- data frame 是 list of vectors,所以可以赋 NULL 来 remove 某个 column

- To add a literal NULL to a list, use

[andlist(NULL).

x <- list(a = 1, b = 2)

x[["b"]] <- NULL

str(x)

#> List of 1

#> $ a: num 1

y <- list(a = 1)

y["b"] <- list(NULL)

str(y)

#> List of 2

#> $ a: num 1

#> $ b: NULL

2.7 Applications

Lookup tables (character subsetting)

讲真,lookup 做 noun 的时候表示的是 looking something up 这样一个动作,所以把一个 var 命名为 lookup 我是有点难理解的;用 lookupTable 会好一点,但是要注意逻辑是 looking something up in this lookupTable。

Say you want to convert abbreviations:

x <- c("m", "f", "u", "f", "f", "m", "m")

lut <- c(m = "Male", f = "Female", u = NA)

lut[x]

#> m f u f f m m

#> "Male" "Female" NA "Female" "Female" "Male" "Male"

unname(lut[x])

#> [1] "Male" "Female" NA "Female" "Female" "Male" "Male"

# Or with fewer output values

lut2 <- c(m = "Known", f = "Known", u = "Unknown")

unname(lut2[x])

#>

#> [1] "Known" "Known" "Unknown" "Known" "Known" "Known" "Known"

Random samples/bootstrap (integer subsetting)

df <- data.frame(x = rep(1:3, each = 2), y = 6:1, z = letters[1:6])

# Set seed for reproducibility

set.seed(10)

# Randomly reorder

df[sample(nrow(df)), ]

# Select 3 random rows

df[sample(nrow(df), 3), ]

# Select 6 bootstrap replicates

df[sample(nrow(df), 6, rep = T), ]

Expanding aggregated counts (integer subsetting)

df <- data.frame(x = c(2, 4, 1), y = c(9, 11, 6), n = c(3, 5, 1))

rep(1:nrow(df), df$n)

#> [1] 1 1 1 2 2 2 2 2 3

df[rep(1:nrow(df), df$n), ]

#> x y n

#> 1 2 9 3

#> 1.1 2 9 3

#> 1.2 2 9 3

#> 2 4 11 5

#> 2.1 4 11 5

#> 2.2 4 11 5

#> 2.3 4 11 5

#> 2.4 4 11 5

#> 3 1 6 1

这里 n 是表示 count,比如 (x, y, n) = (2, 9, 3) 就表示 (x, y) = (2, 9) 的数据有 3 个。我们用上面的语句把这个 count 展开。

3. Functions

3.1 Function components

All R functions have three parts:

body(f), the code inside the function.formals(f), the list of formal arguments which controls how you can call the function.environment(f), the “map” of the location of the function’s variables.

f <- function(x) x^2

f

#> function(x) x^2

formals(f)

#> $x

body(f)

#> x^2

environment(f)

#> <environment: R_GlobalEnv>

Exception: Primitive functions

There is one exception to the rule that functions have three components. Primitive functions, like sum(), call C code directly with .Primitive() interface and contain no R code. Therefore their formals(), body(), and environment() are all NULL:

sum

#> function (..., na.rm = FALSE) .Primitive("sum")

formals(sum)

#> NULL

body(sum)

#> NULL

environment(sum)

#> NULL

Primitive functions are only found in the base package, and since they operate at a low level, they can be more efficient (primitive replacement functions don’t have to make copies), and can have different rules for argument matching (e.g., switch and call). This, however, comes at a cost of behaving differently from all other functions in R. Hence the R core team generally avoids creating them unless there is no other option.

# 见名知意

is.function(f)

is.primitive(f)

3.2 Lexical scoping

Scoping is the set of rules that govern how R looks up the value of a symbol. 比如我们有 x <- 10,那么 scoping is the set of rules that leads R to go from the symbol x to its value 10.

R has two types of scoping: lexical scoping, implemented automatically at the language level, and dynamic scoping, used in select functions to save typing during interactive analysis. We discuss lexical scoping here because it is intimately tied to function creation.

The “lexical” in lexical scoping doesn’t correspond to the usual English definition (“of or relating to words or the vocabulary of a language as distinguished from its grammar and construction”) but comes from the computer science term “lexing”, which is part of the process that converts code represented as text to meaningful pieces that the programming language understands.

Lexical scoping looks up symbol values based on how functions were nested when they were created, NOT how they are nested when they are called. With lexical scoping, you don’t need to know how the function is called to figure out where the value of a variable will be looked up. You just need to look at the function’s definition.

There are four basic principles behind R’s implementation of lexical scoping:

- name masking

- functions vs. variables

- a fresh start

- dynamic lookup

3.2.1 Name Masking

注:老实说这一节我不明白为啥叫 Name Masking,因为好像并没有讲 masking 啊……根据 How does R handle overlapping object names? 的说法:

Masking occurs when two or more packages have objects (such as functions) with the same name.

这个和我理解得一样。Anyway,以下是正文。

When there is a name in a function, R will look for the name’s definition inside the current function, then where that function was defined (maybe an outer function), and so on, all the way up to the global environment, and then on to other loaded packages.

x <- 1

h <- function() {

y <- 2

i <- function() {

z <- 3

c(x, y, z)

}

i()

}

h() # output: [1] 1 2 3

The same rules apply to closures, functions created by other functions. The following function, j(), returns a function:

j <- function(x) {

y <- 2

function() {

c(x, y)

}

}

k <- j(1)

k() # output: [1] 1 2

This seems a little magical. How does R know what the value of y is after the function has been called? It works because k preserves the environment in which it was defined and because the environment includes the value of y. Environments gives some pointers on how you can dive in and figure out what values are stored in the environment associated with each function.

3.2.2 Functions vs. variables

The same principles apply regardless of the type of associated value — finding functions works exactly the same way as finding variables.

However, there is one small tweak to the rule. If you are using a name in a context where it’s obvious that you want a function (e.g., f(3)), R will ignore objects that are not functions while it is searching. In the following example n takes on a different value depending on whether R is looking for a function or a variable.

n <- function(x) x / 2 # this n is a function

o <- function() {

n <- 10 # and this n is a variable

n(n) # WTF!

}

o() # output: [1] 5

However, using the same name for functions and other objects will make for confusing code, and is generally best avoided.

3.2.3 A fresh start

# exists("a") returns true if variable `a` exists.

j <- function() {

if (!exists("a")) {

a <- 1

} else {

a <- a + 1

}

print(a)

}

j()

j() returns the same value, 1, every time. This is because every time a function is called, a new environment is created to host execution. A function has no way to tell what happened the last time it was run (除非我们用 <<-); each invocation is completely independent.

3.2.4 Dynamic lookup

Lexical scoping determines where to look for values, not when to look for them. R looks for values when the function is run, not when it’s created (但查找还是先到 definition 里去查). This means that the output of a function can be different depending on objects outside its environment:

f <- function() x

x <- 15

f()

#> [1] 15

x <- 20

f()

#> [1] 20

You generally want to avoid this behaviour because it means the function is no longer self-contained. This is a common error — if you make a spelling mistake in your code, you won’t get an error when you create the function, and you might not even get one when you run the function, depending on what variables are defined in the global environment.

One way to detect this problem is the findGlobals() function from codetools. This function lists all the external dependencies of a function:

f <- function() x + 1

codetools::findGlobals(f)

#> [1] "+" "x"

Another way to try and solve the problem would be to manually change the environment of the function to the emptyenv(), an environment which contains absolutely nothing:

environment(f) <- emptyenv()

f()

#> Error in f(): could not find function "+"

However this hardly works because R relies on lexical scoping to find everything, even the + operator. It’s never possible to make a function completely self-contained because you must always rely on functions defined in base R or other packages.

3.3 Every operation is a function call

Great, the C++ way.

This includes infix operators like +, control flow operators like for, if, and while, subsetting operators like [] and $, and even the curly brace {.

Note that `, the backtick, lets you refer to functions or variables that have otherwise reserved or illegal names:

x <- 10; y <- 5

x + y

#> [1] 15

`+`(x, y)

#> [1] 15

for (i in 1:2) print(i)

#> [1] 1

#> [1] 2

`for`(i, 1:2, print(i))

#> [1] 1

#> [1] 2

x[3]

#> [1] NA

`[`(x, 3)

#> [1] NA

It is possible to override the definitions of these special functions, but you need to be careful.

It’s more often useful to treat special functions as ordinary functions. For example, we could use sapply() to add 3 to every element of a list by first defining a function add(), like this:

add <- function(x, y) x + y

sapply(1:10, add, 3)

#> [1] 4 5 6 7 8 9 10 11 12 13

But we can also get the same effect using the built-in + function:

sapply(1:5, `+`, 3)

#> [1] 4 5 6 7 8

# This works because sapply can use match.fun() to find functions given their names.

sapply(1:5, "+", 3)

#> [1] 4 5 6 7 8

A more useful application is to combine lapply() or sapply() with subsetting:

x <- list(1:3, 4:9, 10:12)

sapply(x, "[", 2)

#> [1] 2 5 11

# equivalent to

sapply(x, function(x) x[2])

#> [1] 2 5 11

3.4 Function arguments

3.4.1 Calling functions

When calling a function you can specify arguments by position, by complete name, or by partial name. Arguments are matched

- first by exact name (perfect matching),

- then by prefix matching,

- and finally by position.

- then by prefix matching,

If a function uses ..., you can only specify arguments listed after ... with their full name.

3.4.2 Calling a function given a list of arguments

Suppose you had a list of function arguments. How could you then send that list to mean()? You need do.call():

args <- list(1:10, na.rm = TRUE)

do.call(mean, args)

#> [1] 5.5

# Equivalent to

mean(1:10, na.rm = TRUE)

#> [1] 5.5

注意这里 na.rm 是 args 的一个元素,并不是 args 的一个参数。

3.4.3 Default and missing arguments

You can determine if an argument was supplied or not with the missing() function.

i <- function(a, b) {

c(missing(a), missing(b))

}

i()

#> [1] TRUE TRUE

i(a = 1)

#> [1] FALSE TRUE

i(b = 2)

#> [1] TRUE FALSE

i(1, 2)

#> [1] FALSE FALSE

3.4.4 Lazy evaluation

By default, R function arguments are lazy — they’re only evaluated if they’re actually used:

f <- function(x) {

10

}

f(stop("This is an error!"))

#> [1] 10

stop() is not used, so not evaluated (thus not executed).

If you want to ensure that an argument is evaluated you can use force():

f <- function(x) {

force(x)

10

}

f(stop("This is an error!"))

#> Error in force(x): This is an error!

This code is exactly equivalent to:

f <- function(x) {

x

10

}

f(stop("This is an error!"))

This is important when creating closures with lapply() or a loop:

add <- function(x) {

function(y) x + y

}

adders <- lapply(1:10, add)

adders[[1]](10)

#> [1] 20

adders[[10]](10)

#> [1] 20

因为 x 在 add 内没有值,所以最终 adders[[1]] 到 adders[[10]] 这 10 个函数用的都是外部的 x 值,而外部 x 的值是最终定格在 10 的(1:10),所以你调用任意的 adders[[n]](10) 都是执行 10+10 而不是 n+10。

正确的写法是:

add <- function(x) {

force(x)

function(y) x + y

}

adders2 <- lapply(1:10, add)

adders2[[1]](10)

#> [1] 11

adders2[[10]](10)

#> [1] 20

Default arguments are evaluated inside the function:

f <- function(x = ls()) {

a <- 1

x

}

# ls() evaluated inside f:

f()

#> [1] "a" "x"

More technically, an unevaluated argument is called a promise, or (less commonly) a thunk ([θʌŋk], a delayed computation). A promise is made up of two parts:

- The expression which gives rise to the delayed computation. (It can be accessed with

substitute().) - The environment where the expression was created and where it should be evaluated.

The first time a promise is accessed, the expression is evaluated in the environment where it was created. This value is cached, so that subsequent access to the evaluated promise does not recompute the value (but the original expression is still associated with the value, so substitute() can continue to access it). You can find more information about a promise using pryr::promise_info(). This uses some C++ code to extract information about the promise without evaluating it, which is impossible to do in pure R code.

3.4.5 Variable argument list ...

- ellipsis: [ɪˈlɪpsɪs], 省略号

To capture ... in a form that is easier to work with, you can use list(...):

f <- function(...) {

names(list(...))

}

f(a = 1, b = 2)

#> [1] "a" "b"

It’s often better to be explicit rather than implicit, so you might instead ask users to supply a list of additional arguments. That’s certainly easier if you’re trying to use ... with multiple additional functions.

3.5 Special calls

R supports two additional syntaxes for calling special types of functions:

- infix and

- replacement functions.

3.5.1 Infix functions

Most functions in R are “prefix” operators: the name of the function comes before the arguments. You can also create infix functions where the function name comes in between its arguments, like + or -. All user-created infix functions must start and end with %. R comes with the following infix functions predefined: % %, %*%, %/%, %in%, %o%, %x%.

For example, we could create a new operator that pastes together strings:

`%+%` <- function(a, b) paste0(a, b)

"new" %+% " string"

#> [1] "new string"

3.5.2 Replacement functions

Replacement functions act like they modify their arguments in place, and have the special name xxx<-. They typically have two arguments (x and value), although they can have more, and they must return the modified object. For example, the following function allows you to modify the second element of a vector:

`second<-` <- function(x, value) {

x[2] <- value

x

}

x <- 1:10

second(x) <- 5L

x

#> [1] 1 5 3 4 5 6 7 8 9 10

I say they “act” like they modify their arguments in place, because they actually create a modified copy. We can see that by using pryr::address() to find the memory address of the underlying object.

library(pryr)

x <- 1:10

address(x) # 类似 C 的 &x

#> [1] "0x433fdf0"

second(x) <- 6L

address(x)

#> [1] "0x4305078"

Built-in functions that are implemented using .Primitive() will modify in place:

x <- 1:10

address(x)

#> [1] "0x103945110"

x[2] <- 7L

address(x)

#> [1] "0x103945110"

It’s important to be aware of this behaviour since it has important performance implications.

If you want to supply additional arguments, they go in between x and value:

`modify<-` <- function(x, position, value) {

x[position] <- value

x

}

modify(x, 1) <- 10

x

#> [1] 10 6 3 4 5 6 7 8 9 10

When you call modify(x, 1) <- 10, behind the scenes R turns it into x <- `modify<-`(x, 1, 10). This means you CANNOT do things like: modify(get("x"), 1) <- 10 because that gets turned into the invalid code: get("x") <- `modify<-`(get("x"), 1, 10).

3.6 Return values

The last expression evaluated in a function becomes the return value, or you can use an explicit return().

The functions that are the easiest to understand and reason about are pure functions: functions that always map the same input to the same output and have no other impact on the workspace. In other words, pure functions have no side effects: they don’t affect the state of the world in any way apart from the value they return.

R protects you from one type of side effect: most R objects have copy-on-modify semantics. So modifying a function argument does not change the original value. (也就是传说中的 pass-by-value)

Most base R functions are pure, with a few notable exceptions:

library()which loads a package, and hence modifies the search path.setwd(),Sys.setenv(),Sys.setlocale()which change the working directory, environment variables, and the locale, respectively.plot()and friends which produce graphical output.write(),write.csv(),saveRDS(), etc. which save output to disk.options()andpar()which modify global settings.- S4 related functions which modify global tables of classes and methods.

- Random number generators which produce different numbers each time you run them.

3.6.1 Invisible

Functions can return invisible values, which are not printed out by default when you call the function.

f1 <- function() 1

f2 <- function() invisible(1)

f1()

#> [1] 1

f2()

f1() == 1

#> [1] TRUE

f2() == 1

#> [1] TRUE

You can force an invisible value to be displayed by wrapping it in parentheses:

(f2())

#> [1] 1

The most common function that returns invisibly is <-:

a <- 2

(a <- 2)

#> [1] 2

3.6.2 On exit

As well as returning a value, functions can set up other triggers to occur when the function is finished using on.exit(). This is often used as a way to guarantee that changes to the global state are restored when the function exits. The code in on.exit() is run regardless of how the function exits, whether with an return, an error, or simply reaching the end of the function body.

in_dir <- function(dir, code) {

old <- setwd(dir)

on.exit(setwd(old))

force(code)

}

getwd()

#> [1] "/home/travis/build/hadley/adv-r"

in_dir("~", getwd())

#> [1] "/home/travis"

Caution: If you’re using multiple on.exit() calls within a function, make sure to set add = TRUE. Unfortunately, the default in on.exit() is add = FALSE, so that every time you run it, it overwrites existing exit expressions.

4. OO field guide

R has three object oriented systems, S3, S4, “Reference Classes” (S5), and a “Base Types” system.

- Base types, the internal C-level types that underlie the other OO systems. Base types are mostly manipulated using C code, but they’re important to know about because they provide the building blocks for the other OO systems.

- S3 implements a style of OO programming called generic-function OO. This is different from most programming languages, like Java, C++, and C#, which implement message-passing OO.

- With message-passing, objects usually appear before the name of the method/message: e.g.,

canvas.drawRect("blue"). - S3 is different. While computations are still carried out via methods, a special type of function called a generic function decides which method to call, e.g., drawRect(canvas, “blue”).

- S3 is a very casual system. It has no formal definition of classes.

- With message-passing, objects usually appear before the name of the method/message: e.g.,

- S4 works similarly to S3, but is more formal. There are two major differences to S3:

- S4 has formal class definitions, which describe the representation and inheritance for each class, and has special helper functions for defining generics and methods.

- S4 also has multiple dispatch, which means that generic functions can pick methods based on the class of any number of arguments, not just one.

- Reference classes, called RC for short, are quite different from S3 and S4. RC implements message-passing OO, so methods belong to classes, not functions. $ is used to separate objects and methods, so method calls look like

canvas$drawRect("blue"). RC objects are also mutable: they don’t use R’s usual copy-on-modify semantics, but are modified in place. This makes them harder to reason about, but allows them to solve problems that are difficult to solve with S3 or S4.

In the examples below, you’ll need install.packages("pryr"), to access useful functions for examining OO properties.

4.1 Quiz

Q: How do you tell what OO system (base, S3, S4, or RC) an object is associated with?

- If

!is.object(x), it’s a base object.- If

!isS4(x), it’s S3.- If

!is(x, "refClass"), it’s S4;- otherwise it’s RC.

- If

- If

Q: How do you determine the base type (like integer or list) of an object?

- Use

typeof()to determine the base class of an object.

Q: What is a generic function?

- A generic function calls specific methods depending on the class of it inputs. In S3 and S4 object systems, methods belong to generic functions, not classes like in other programming languages.

4.2 Base types

Underlying every R object is a C structure (or struct) that describes how that object is stored in memory. The struct includes the contents of the object, the information needed for memory management, and, most importantly for this section, a type. This is the base type of an R object. Base types are not really an object system because only the R core team can create new types. As a result, new base types are added very rarely.

Data structures section explains the most common base types (atomic vectors and lists), but base types also encompass functions, environments, and other more exotic objects likes names, calls, and promises.

You can determine an object’s base type with typeof(). Unfortunately the names of base types are not used consistently throughout R:

# The type of a function is "closure"

f <- function() {}

typeof(f)

#> [1] "closure"

is.function(f)

#> [1] TRUE

# The type of a primitive function is "builtin"

typeof(sum)

#> [1] "builtin"

is.primitive(sum)

#> [1] TRUE

Functions that behave differently for different base types are almost always written in C, where dispatch occurs using switch statements (e.g., switch(TYPEOF(x))).

4.3 S3

4.3.1 Recognising objects, generic functions, and methods

Most objects that you encounter are S3 objects. But unfortunately there’s no simple way to test if an object is an S3 object in base R. The closest you can come is is.object(x) & !isS4(x), i.e., it’s an object, but not S4. An easier way is to use pryr::otype():

library(pryr)

df <- data.frame(x = 1:10, y = letters[1:10])

otype(df) # A data frame is an S3 class

#> [1] "S3"

otype(df$x) # A numeric vector isn't

#> [1] "base"

otype(df$y) # A factor is

#> [1] "S3"

In S3, methods belong to functions, called generic functions, or generics for short. To determine if a function is an S3 generic, you can inspect its source code for a call to UseMethod(): that’s the function that figures out the correct method to call, the process of method dispatch. pryr also provides ftype() which describes the object system, if any, associated with a function:

mean

#> function (x, ...)

#> UseMethod("mean")

#> <bytecode: 0x2bc14a0>

#> <environment: namespace:base>

ftype(mean)

#> [1] "s3" "generic"

Some S3 generics, like [, sum(), and cbind(), don’t call UseMethod() because they are implemented in C. Instead, they call the C functions DispatchGroup() or DispatchOrEval(). Functions that do method dispatch in C code are called internal generics and are documented in ?"internal generic". ftype() knows about these special cases too.

Given a class, the job of an S3 generic is to call the right S3 method. You can recognise S3 methods by their names, which look like generic.class(). For example, the Date method for the mean() generic is called mean.Date(), and the factor method for print() is called print.factor().

This is the reason that most modern style guides discourage the use of . in function names: it makes them look like S3 methods.

ftype(t.data.frame) # data frame method for t()

#> [1] "s3" "method"

ftype(t.test) # generic function for t tests

#> [1] "s3" "generic"

You can see all the methods that belong to a generic with methods():

methods("mean")

#> [1] mean.Date mean.default mean.difftime mean.POSIXct mean.POSIXlt

methods("t.test")

#> [1] t.test.default* t.test.formula*

#>

#> Non-visible functions are asterisked

Apart from methods defined in the base package, most S3 methods will not be visible: use getS3method() to read their source code.

You can also list all generics that have a method for a given class:

methods(class = "ts")

#> [1] aggregate.ts as.data.frame.ts cbind.ts* cycle.ts*

#> [5] diffinv.ts* diff.ts* kernapply.ts* lines.ts*

#> [9] monthplot.ts* na.omit.ts* Ops.ts* plot.ts

#> [13] print.ts* time.ts* [<-.ts* [.ts*

#> [17] t.ts* window<-.ts* window.ts*

#>

#> Non-visible functions are asterisked

4.3.2 Defining classes and creating objects

S3 is a simple and ad hoc system; it has no formal definition of a class. To make an object an instance of a class, you just take an existing base object and set the class attribute. You can do that during creation with structure(), or after the fact with class<-():

# Create and assign class in one step

foo <- structure(list(), class = "foo")

# Create, then set class

foo <- list()

class(foo) <- "foo"

You can determine the class of any object using class(x), and see if an object inherits from a specific class using inherits(x, “classname”).

class(foo)

#> [1] "foo"

inherits(foo, "foo")

#> [1] TRUE

S3 objects are usually built on top of lists, or atomic vectors with attributes. You can also turn functions into S3 objects.

The class of an S3 object can be a vector, which describes behaviour from most to least specific. For example, the class of the glm() object is c("glm", "lm") indicating that generalised linear models inherit behaviour from linear models.

Most S3 classes provide a constructor function. This ensures that you’re creating the class with the correct components. Constructor functions usually have the same name as the class (like factor() and data.frame()).

foo <- function(x) {

if (!is.numeric(x)) stop("X must be numeric")

structure(list(x), class = "foo")

}

Apart from developer supplied constructor functions, S3 has no checks for correctness. This means you can change the class of existing objects. If you’ve used other OO languages, this might make you feel queasy ([ˈkwi:zi], having an unpleasantly nervous or doubtful feeling).

4.3.3 Creating new methods and generics

一般的模式是这样的:

f <- function(x) UseMethod("f") # dispatch on the function name "f"

objA <- structure(list(), class = "a") # we define an object, `objA`, of class `a`

f.a <- function(x) { # f.a 专门负责 class 为 `a` 的参数

# code goes here

}

f(objA) # dispatched to f.a(objA)

其中 f.a 是一个 method,然后 f 是一个 generic。

Adding a method to an existing generic works in the same way:

mean.a <- function(x) {

# code goes here

}

mean(objA) # dispatched to mean.a(objA)

A default class makes it possible to set up a fall back method for otherwise unknown classes:

f.default <- function(x) {

# code goes here

}

objB <- structure(list(), class = "b")

f(objB) # dispatched to f.default(objB) because we don't have `f.b(x)`

4.4 S4

S4 works in a similar way to S3, but it adds formality and rigour. Methods still belong to functions, not classes, but:

- Classes have formal definitions which describe their fields and inheritance structures (parent classes).

- Method dispatch can be based on multiple arguments to a generic function, not just one.

- There is a special operator,

@, for extracting slots (aka fields) from an S4 object.

All S4 related code is stored in the methods package. This package is always available when you’re running R interactively, but may not be available when running R in batch mode. For this reason, it’s a good idea to include an explicit library(methods) whenever you’re using S4.

4.4.1 Recognising objects, generic functions, and methods

You can identify an S4 object if:

str()describes it as a “formal” class,isS4()returns TRUE, orpryr::otype()returns “S4”.

4.4.2 Defining classes and creating objects

In S3, you can turn any object into an object of a particular class just by setting the class attribute. S4 is much stricter: you must define the representation of a class with setClass(), and create a new object with new(). You can find the documentation for a class with a special syntax: class?className, e.g., class?mle.

An S4 class has three key properties:

- A name: an alpha-numeric class identifier. By convention, S4 class names use

UpperCamelCase. - A named list of slots (fields), which defines slot names and permitted classes.

- For example, a person class might be represented by a character name and a numeric age:

list(name = "character", age = "numeric").

- For example, a person class might be represented by a character name and a numeric age:

- A string giving the class it inherits from, or, in S4 terminology, that it contains. You can provide multiple classes for multiple inheritance, but this is an advanced technique which adds much complexity.

S4 classes have other optional properties like a validity method that tests if an object is valid, and a prototype object that defines default slot values. See ?setClass for more details.

The following example creates a Person class with fields name and age, and an Employee class that inherits from Person. The Employee class inherits the slots and methods from the Person, and adds an additional slot, boss. To create objects we call new() with the name of the class, and name-value pairs of slot values.

setClass("Person",

slots = list(name = "character", age = "numeric"))

setClass("Employee",

slots = list(boss = "Person"),

contains = "Person")

alice <- new("Person", name = "Alice", age = 40)

john <- new("Employee", name = "John", age = 20, boss = alice)

Most S4 classes also come with a constructor function with the same name as the class: if that exists, use it instead of calling new() directly.

To access slots of an S4 object use @ or slot() (@ is equivalent to $, and slot() to [[.):

alice@age

#> [1] 40

slot(john, "boss")

#> An object of class "Person"

#> Slot "name":

#> [1] "Alice"

#>

#> Slot "age":

#> [1] 40

If an S4 object contains (inherits from) an S3 class or a base type, it will have a special .Data slot which contains the underlying base type or S3 object.

4.4.3 Creating new methods and generics

S4 provides special functions for creating new generics and methods. setGeneric() creates a new generic or converts an existing function into a generic. setMethod() takes the name of the generic, the classes the method should be associated with, and a function that implements the method:

setGeneric("union")

#> [1] "union"

setMethod("union",

c(x = "data.frame", y = "data.frame"),

function(x, y) {

unique(rbind(x, y))

}

)

#> [1] "union"

If you create a new generic from scratch, you need to supply a function that calls standardGeneric():

setGeneric("myGeneric", function(x) {

standardGeneric("myGeneric")

})

#> [1] "myGeneric"

standardGeneric() is the S4 equivalent to UseMethod().

4.5 RC

They are fundamentally different to S3 and S4 because:

- RC methods belong to objects, not functions

- RC objects are mutable: the usual R copy-on-modify semantics do not apply

These properties make RC objects behave more like objects do in most other programming languages, e.g., Python, Ruby, Java, and C#. Reference classes are implemented using R code: they are a special S4 class that wraps around an environment.

4.5.1 Defining classes and creating objects

Account <- setRefClass("Account",

fields = list(balance = "numeric"))

a <- Account$new(balance = 100)

a$balance

#> [1] 100

a$balance <- 200

a$balance

#> [1] 200

Note that RC objects are mutable, i.e., they have reference semantics, and are not copied-on-modify:

b <- a

b$balance

#> [1] 200

a$balance <- 0

b$balance

#> [1] 0

嗯,这真真是 reference。

For this reason, RC objects come with a copy() method that allow you to make a copy of the object:

c <- a$copy()

c$balance

#> [1] 0

a$balance <- 100

c$balance

#> [1] 0

RC methods are associated with a class and can modify its fields in place. In the following example, note that you access the value of fields with their name, and modify them with <<-:

Account <- setRefClass("Account",

fields = list(balance = "numeric"),

methods = list(

withdraw = function(x) {

balance <<- balance - x

},

deposit = function(x) {

balance <<- balance + x

}

)

)

The final important argument to setRefClass() is contains. This is the name of the parent RC class to inherit behaviour from. The following example creates a new type of bank account that returns an error preventing the balance from going below 0:

NoOverdraft <- setRefClass("NoOverdraft",

contains = "Account",

methods = list(

withdraw = function(x) {

if (balance < x) stop("Not enough money")

balance <<- balance - x

}

)

)

All reference classes eventually inherit from envRefClass. It provides useful methods like

copy(),callSuper()(to call the parent field),field()(to get the value of a field given its name),export()(equivalent toas()), andshow()(overridden to control printing).

See the inheritance section in setRefClass() for more details.

4.5.2 Recognising objects and methods

You can recognise RC objects if they are S4 objects (isS4(x)) that inherit from “refClass” (is(x, "refClass")). pryr::otype() will return “RC”. RC methods are also S4 objects, with class refMethodDef.

4.5.3 Method dispatch

When you call x$f(), R will look for a method f in the class of x, then in its parent, then its parent’s parent, and so on. From within a method, you can call the parent method directly with callSuper().

4.6 Picking a system

- In R you usually create fairly simple objects and methods for pre-existing generic functions like

print(),summary(), andplot(). S3 is well suited to this task, and the majority of OO code that I have written in R is S3. S3 is a little quirky, but it gets the job done with a minimum of code. - If you are creating more complicated systems of interrelated objects, S4 may be more appropriate. A good example is the

Matrixpackage by Douglas Bates and Martin Maechler. It is designed to efficiently store and compute with many different types of sparse matrices. S4 is also used extensively byBioconductorpackages, which need to model complicated interrelationships between biological objects (BTW,Bioconductorprovides many good resources for learning S4.). - If you’ve programmed in a mainstream OO language, RC will seem very natural. But because they can introduce side effects through mutable state.

5. Environments

- The environment is the data structure that powers scoping.

- Environments can also be useful data structures in their own right because they have reference semantics. When you modify a binding in an environment, the environment is not copied; it’s modified in place.

5.1 Quiz

Q: List at least three ways that an environment is different to a list.

- There are four ways: every object in an environment must have a name; order doesn’t matter; environments have parents; environments have reference semantics.

Q: What is the parent of the global environment? What is the only environment that doesn’t have a parent?

- The parent of the global environment is the last package that you loaded.

- The only environment that doesn’t have a parent is the empty environment.

Q: What is the enclosing environment of a function? Why is it important?

- The enclosing environment of a function is the environment where it was created. It determines where a function looks for variables.

Q: How are <- and <<- different?

<-always creates a binding in the current environment;<<-rebinds an existing name in a parent of the current environment.

5.2 Environment basics

The job of an environment is to associate, or bind, a set of names to a set of values. Each name points to an object stored elsewhere in memory:

e <- new.env()

e$a <- FALSE

e$b <- "a"

e$c <- 2.3

e$d <- 1:3

e$a <- e$d # a 和 d 同时指向同一组 1:3

e$a <- 1:3 # 此时 a 指向一组新的 1:3

If an object has no names pointing to it, it gets automatically deleted by the garbage collector.

Every environment has a parent, another environment. The parent is used to implement lexical scoping: if a name is not found in an environment, then R will look in its parent (and so on). Only one environment doesn’t have a parent: the empty environment.

More technically, an environment is made up of two components:

- the frame, which contains the name-object bindings, and

- the parent environment.

Unfortunately “frame” is used inconsistently in R. For example, parent.frame() doesn’t give you the parent frame of an environment. Instead, it gives you the calling environment.

There are four special environments:

- The

globalenv(), or global environment, is the interactive workspace. This is the environment in which you normally work. The parent of the global environment is the last package that you attached withlibrary()orrequire(). - The

baseenv(), or base environment, is the environment of thebasepackage. Its parent is the empty environment. - The

emptyenv(), or empty environment, is the ultimate ancestor of all environments, and the only environment without a parent. - The

environment()is the current environment.

search() lists all parents of the global environment. This is called the search path because objects in these environments can be found from the top-level interactive workspace. It contains one environment for each attached package and any other objects that you’ve attach()ed. It also contains a special environment called Autoloads which is used to save memory by only loading package objects (like big datasets) when needed.

You can access any environment on the search list using as.environment().

search()

#> [1] ".GlobalEnv" "package:stats" "package:graphics"

#> [4] "package:grDevices" "package:utils" "package:datasets"

#> [7] "package:methods" "Autoloads" "package:base"

as.environment("package:stats")

#> <environment: package:stats>

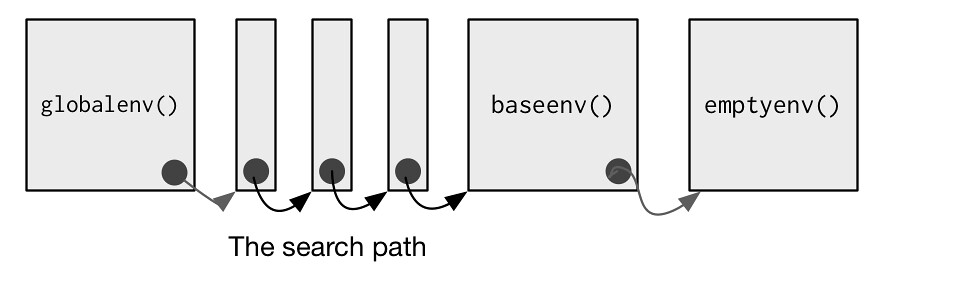

globalenv(), baseenv(), the environments on the search path, and emptyenv() are connected as shown below. Each time you load a new package with library() it is inserted between the global environment and the package that was previously at the button of the search path.

To create an environment manually, use new.env():

e <- new.env()

# the default parent provided by new.env() is environment from

# which it is called - in this case that's the global environment.

parent.env(e)

#> <environment: R_GlobalEnv>

e$a <- 1

e$b <- 2

e$.a <- 2

ls(e)

#> [1] "a" "b"

ls(e, all.names = TRUE)

#> [1] "a" ".a" "b"

str(e)

#> <environment: 0x122f450>

ls.str(e)

#> a : num 1

#> b : num 2

ls.str(e, all.names = TRUE)

#> .a : num 2

#> a : num 1

#> b : num 2

e$c <- 3

e$c

#> [1] 3

e[["c"]]

#> [1] 3

get("c", envir = e) # get() uses the regular scoping rules and throws an error if the binding is not found

#> [1] 3

rm(".a", envir = e) # e$.a <- NULL 并不能 remove 掉 .a

x <- 10

exists("x", envir = e)

#> [1] TRUE

exists("x", envir = e, inherits = FALSE)

#> [1] FALSE

# To compare environments, you must use identical() not ==:

identical(globalenv(), environment())

#> [1] TRUE

globalenv() == environment()

#> Error in globalenv() == environment(): comparison (1) is possible only for atomic and list types

5.3 Recursing over environments

library(pryr)

x <- 5

where("x")

#> <environment: R_GlobalEnv>

where("mean")

#> <environment: base>

where 的实现大概是这样的:

where <- function(name, env = parent.frame()) {

if (identical(env, emptyenv())) {

# Base case

stop("Can't find ", name, call. = FALSE)

} else if (exists(name, envir = env, inherits = FALSE)) {

# Success case

env

} else {

# Recursive case

where(name, parent.env(env))

}

}

5.4 Function environments

- The enclosing environment is the environment where the function was created.

- Every function has one and only one enclosing environment.

- Binding a function to a name with

<-defines a binding environment. - Calling a function creates an ephemeral ([ɪˈfemərəl], lasting a very short time) execution environment that stores variables created during execution.

- Every execution environment is associated with a calling environment, which tells you where the function was called.

- For the 3 types of environment above, there may be 0, 1, or many environments associated with each function.

5.4.1 The enclosing environment

When a function is created, it gains a reference to the environment where it was made. This is the enclosing environment and is used for lexical scoping. You can determine the enclosing environment of a function by calling environment(f):

y <- 1

f <- function(x) x + y

environment(f)

#> <environment: R_GlobalEnv>

5.4.2 Binding environments

The name of a function is defined by a binding. The binding environments of a function are all the environments which have a binding to it.

e <- new.env()

e$g <- function() 1

- 所谓 binding 就是

foo <- bar这么一个赋值。binding environment 最简单的判断方法就是:变量(函数名)在哪个 environment,哪个 environment 就是 binding environment。比如上面的e$g <- function,g在e内部,所以 binding environment 就是e - 而

e$g的 enclosing environment 是 global,因为function的定义是写在当前 workspace 的。实在吃不准的时候别忘了可以用environment(e$g) - The enclosing environment belongs to the function, and never changes, even if the function is moved to a different environment.

- The enclosing environment determines how the function finds values;

- the binding environments determine how we find the function.

Namespaces are implemented using environments, taking advantage of the fact that functions don’t have to live in their enclosing environments. For example, take the base function sd(). It’s binding and enclosing environments are different:

environment(sd)

#> <environment: namespace:stats>

where("sd")

#> <environment: package:stats>

The definition of sd() uses var(), but if we make our own version of var() it doesn’t affect sd():

x <- 1:10

sd(x)

#> [1] 3.02765

var <- function(x, na.rm = TRUE) 100

sd(x)

#> [1] 3.02765

This works because every package has two environments associated with it: the package environment and the namespace environment.

- The package environment contains every publicly accessible function, and is placed on the search path.

- The namespace environment contains all functions (including internal functions), and its parent environment is a special imports environment that contains bindings to all the functions that the package needs.

- Every exported function in a package is bound into the package environment, but enclosed by the namespace environment.

When we type var into the console, it’s found first in the global environment. When sd() looks for var() it finds it first in its namespace environment so never looks in the globalenv().

5.4.3 Execution environments

Each time a function is called, a new environment is created to host execution. Once the function has completed, this environment is thrown away.

5.4.4 Calling environments

f2 <- function() {

x <- 5

function() {

innerX <- get("x", environment())

outerX <- get("x", parent.frame())

list(outerX = outerX, innerX = innerX, x = x)

}

}

g2 <- f2()

x <- 47

str(g2())

#> List of 3

#> $ outerX: num 47

#> $ innerX: num 5

#> $ x : num 5

identical(parent.env(environment(g2)), environment(f2))

#> [1] TRUE

Note that each execution environment has two parents: a calling environment and an enclosing environment. R’s regular scoping rules only use the enclosing parent; parent.frame() allows you to access the calling parent.

Looking up variables in the calling environment rather than in the enclosing environment is called dynamic scoping. Dynamic scoping is primarily useful for developing functions that aid interactive data analysis. It is one of the topics discussed in “non-standard evaluation”.

5.4.5 Summary

总结一下:

- Enclosing environment: 函数定义所在的 environment

- 获取方法:

- 在函数

f外部调用environment(f) - 在函数内部调用

parent.env(environment())

- 在函数

- The enclosing environment belongs to the function, and never changes.

- 获取方法:

- Binding environment:函数名所在的 environment

- 比如

e$g <- function() {},binding environment 就是e

- 比如

- Execution environment:函数执行创建的 envrionment

- 获取方法:函数内部调用

environment()(不带参数,表示 to get current environment) - Execution environment 有两个 parents:

- Enclosing environment

- Calling environment

- 如果在 Execution environment 里找不到 variable,scoping 规定会去 Enclosing environment 里去找,而不会去 Calling environment

- 获取方法:函数内部调用

- Calling environment:the environment from which a function is called

- 获取方法:函数内部调用

parent.frame()(不带参数)

- 获取方法:函数内部调用

实验:

f <- function() {

print(environment())

function() {

print(environment())

print(parent.frame())

print(parent.env(environment()))

}

}

g <- f()

#> <environment: 0x0000000008175e28> # SAME

g()

#> <environment: 0x0000000008181d68>

#> <environment: R_GlobalEnv>

#> <environment: 0x0000000008175e28> # SAME

5.5 Binding names to values

- The regular assignment arrow,

<-, always creates a variable in the current environment. - The deep assignment arrow,

<<-, never creates a variable in the current environment, but instead modifies an existing variable found by walking up the parent environments.- If

<<-doesn’t find an existing variable, it will create one in the global environment. - You can also do deep binding with

assign():name <<- valueis equivalent toassign("name", value, inherits = TRUE).

- If

There are two other special types of binding:

- A delayed binding creates and stores a promise to evaluate the expression when needed.

- We can create delayed bindings with the special assignment operator

%<d-%, provided by thepryrpackage. %<d-%is a wrapper around the basedelayedAssign()function.

- We can create delayed bindings with the special assignment operator

- An active binding does not bound to a constant object. Instead, they’re re-computed every time they’re accessed.

- We can create active bindings with the special assignment operator

%<a-%, provided by thepryrpackage. %<a-%is a wrapper for the base functionmakeActiveBinding().

- We can create active bindings with the special assignment operator

library(pryr)

x %<d-% (a + b)

a <- 10

b <- 100

x

#> [1] 110

set.seed(47)

x %<a-% runif(1)

x

#> [1] 0.976962

x

#> [1] 0.373916

5.6 Using environments explicitly

Environments are also useful data structures in their own right because they have reference semantics.

modify <- function(x) {

x$a <- 2

invisible()

}

# CASE 1: pass by value

x_l <- list()

x_l$a <- 1

modify(x_l)

x_l$a

#> [1] 1

# CASE 2: pass by reference

x_e <- new.env()

x_e$a <- 1

modify(x_e)

x_e$a

#> [1] 2

When creating your own environment, note that you should set its parent environment to be the empty environment. This ensures you don’t accidentally inherit objects from somewhere else:

x <- 1

e1 <- new.env()

get("x", envir = e1)

#> [1] 1

e2 <- new.env(parent = emptyenv())

get("x", envir = e2)

#> Error in get("x", envir = e2): object 'x' not found

Environments are data structures useful for solving three common problems:

- Avoiding copies of large data.

- Managing state within a package.

- Efficiently looking up values from names.

5.6.1 Avoiding copies

Since environments have reference semantics, you’ll never accidentally create a copy. This makes it a useful vessel for large objects. It’s a common technique for bioconductor packages which often have to manage large genomic objects. Changes to R 3.1.0 have made this use substantially less important because modifying a list no longer makes a deep copy. Previously, modifying a single element of a list would cause every element to be copied, an expensive operation if some elements are large. Now, modifying a list efficiently reuses existing vectors, saving much time.

5.6.2 Package state

Explicit environments are useful in packages because they allow you to maintain state across function calls. Normally, objects in a package are locked, so you can’t modify them directly. Instead, you can do something like this:

my_env <- new.env(parent = emptyenv())

my_env$a <- 1

get_a <- function() {

my_env$a

}

set_a <- function(value) {

old <- my_env$a

my_env$a <- value

invisible(old)

}

5.6.3 As a hashmap

A hashmap is a data structure that takes constant, O(1), time to find an object based on its name. Environments provide this behaviour by default, so can be used to simulate a hashmap.

6. Debugging, condition handling, and defensive programming

详细内容见 原地址。实际操作时再来看学得更快。另外还有

Conditions include:

- Errors raised by

stop() - Warnings raised by

warning() - Messages raised by

message()

Condition handling tools, like withCallingHandlers(), tryCatch(), and try() allow you to take specific actions when a condition occurs.

6.1 Condition handling

6.1.1 Ignore errors with a single try()

try() allows execution to continue even after an error has occurred. If you wrap the statement that creates the error in try(), the error message will be printed but execution will continue

f1 <- function(x) {

log(x)

10

}

f1("x")

#> Error in log(x): non-numeric argument to mathematical function

f2 <- function(x) {

try(log(x))

10

}

f2("a")

#> Error in log(x) : non-numeric argument to mathematical function

#> [1] 10

You can suppress the message with try(..., silent = TRUE).

To pass larger blocks of code to try(), wrap them in {}:

try({

a <- 1

b <- "x"

a + b

})

You can also capture the output of the try() function. If successful, it will be the last result evaluated in the block (just like a function). If unsuccessful it will be an (invisible) object of class try-error:

success <- try(1 + 2)

failure <- try("a" + "b")

class(success)

#> [1] "numeric"

class(failure)

#> [1] "try-error"

try() is particularly useful when you’re applying a function to multiple elements in a list. 这样把每个元素都过一遍,出错了也不要紧,最后的结果我们过滤掉 try-error 就好了:

elements <- list(1:10, c(-1, 10), c(T, F), letters)

results <- lapply(elements, log) # 不用 try 的话会中断执行

#> Warning in lapply(elements, log): NaNs produced

#> Error in FUN(X[[4L]], ...): non-numeric argument to mathematical function

results <- lapply(elements, function(x) try(log(x)))

#> Warning in log(x): NaNs produced

# filter 函数:return TRUE if x inherits from "try-error"

is.error <- function(x) inherits(x, "try-error")

succeeded <- !sapply(results, is.error)

# look at successful results

str(results[succeeded])

#> List of 3

#> $ : num [1:10] 0 0.693 1.099 1.386 1.609 ...

#> $ : num [1:2] NaN 2.3

#> $ : num [1:2] 0 -Inf

# look at inputs that failed

str(elements[!succeeded])

#> List of 1

#> $ : chr [1:26] "a" "b" "c" "d" ...

6.1.2 Handle conditions with tryCatch()

With tryCatch() you map conditions to handlers, named functions that are called with the condition as an input.

show_condition <- function(code) {

tryCatch(code,

error = function(c) print(c$message),

warning = function(c) print(c$message),

message = function(c) print(c$message)

)

}

show_condition(stop("Error-1"))

#> [1] "Error-1"

show_condition(warning("Warning-2"))

#> [1] "Warning-2"

show_condition(message("Message-3"))

#> [1] "Message-3\n"

# If no condition is captured, tryCatch returns the value of `code`

show_condition(10)

#> [1] 10

As well as returning default values when a condition is signalled, handlers can be used to make more informative error messages. For example, by modifying the message stored in the error condition object, the following function wraps read.csv() to add the file name to any errors:

read.csv2 <- function(file, ...) {

tryCatch(read.csv(file, ...), error = function(c) {

c$message <- paste0(c$message, " (in ", file, ")")

stop(c)

})

}

read.csv("code/dummy.csv")

#> Error in file(file, "rt"): cannot open the connection

read.csv2("code/dummy.csv")

#> Error in file(file, "rt"): cannot open the connection (in code/dummy.csv)

6.2 Defensive programming

A key principle of defensive programming is to “fail fast”: as soon as something wrong is discovered, signal an error. This is more work for the author of the function (you!), but it makes debugging easier for users because they get errors earlier rather than later, after unexpected input has passed through several functions.

In R, the “fail fast” principle is implemented in three ways:

- Be strict about what you accept.

- Avoid functions that use non-standard evaluation, like

subset,transform, andwith. - Avoid functions that return different types of output depending on their input.

- The two biggest offenders are

[andsapply(). - Whenever subsetting a data frame in a function, you should always use

drop = FALSE, otherwise you will accidentally convert 1-column data frames into vectors. - Similarly, never use

sapply()inside a function: always use the strictervapply()which will throw an error if the inputs are incorrect types and return the correct type of output even for zero-length inputs.

- The two biggest offenders are

There is a tension between interactive analysis and programming.

- When you’re working interactively, you want R to do what you mean. If it guesses wrong, you want to discover that right away so you can fix it.

- When you’re programming, you want functions that signal errors if anything is even slightly wrong or underspecified.

Keep this tension in mind when writing functions.

- If you’re writing functions to facilitate interactive data analysis, feel free to guess what the analyst wants and recover from minor misspecifications automatically.

- If you’re writing functions for programming, be strict. Never try to guess what the caller wants.

Part II. Functional programming

7. Functional programming

R, at its heart, is a functional programming (FP) language.

Then you’ll learn about the three building blocks of functional programming:

- anonymous functions,

- closures (functions written by functions), and

- lists of functions.

我们举一个 anonymous function + function vector 的例子:

x <- 1:10

# 基础版

summary <- function(x) {

c(mean(x, na.rm = TRUE),

median(x, na.rm = TRUE),

sd(x, na.rm = TRUE),

mad(x, na.rm = TRUE),

IQR(x, na.rm = TRUE))

}

# 高阶版

summary <- function(x) {

funs <- c(mean, median, sd, mad, IQR)

lapply(funs, function(f) f(x, na.rm = TRUE))

}

有点厉害。

7.1 Anonymous functions

In R, functions are objects in their own right. They aren’t automatically bound to a name. If you choose not to give the function a name, you get an anonymous function.

You can call an anonymous function without giving it a name, but the code is a little tricky to read:

# 定义了一个 function,body 是 "3()"

# 这里 "3" 被当做一个函数名,因为没有被调用,所以没有报错

# 一旦调用这个函数,就会报错,以为 "3" 不是一个合法的函数名

function(x) 3()

#> function(x) 3()

# With appropriate parenthesis, the function is called:

(function(x) 3)()

#> [1] 3

(function(x) x + 3)(10)

#> [1] 13

7.2 Closures

Closures are functions written by functions. Closures get their name because they enclose the environment of the parent function and can access all its variables. This is useful because it allows us to have two levels of parameters:

- a parent level that controls operation and

- a child level that does the work.

从上面这一段论述来看,好像被包的函数才叫 closure。

The following example uses this idea to generate a family of power functions in which a parent function (power()) creates two child functions (square() and cube()).

power <- function(exponent) {

function(x) {

x ^ exponent

}

}

square <- power(2)

square(2)

#> [1] 4

square(4)

#> [1] 16

cube <- power(3)

cube(2)

#> [1] 8

cube(4)

#> [1] 64

The difference between square() and cube() is their enclosing environments. One way to see the contents of the environment is to convert it to a list; Another way to see what’s going on is to use pryr::unenclose().

as.list(environment(square))

#> $exponent

#> [1] 2

as.list(environment(cube))

#> $exponent

#> [1] 3

library(pryr)

unenclose(square)

#> function (x)

#> {

#> x^2

#> }

unenclose(cube)

#> function (x)

#> {

#> x^3

#> }

The parent environment of a closure is the execution environment of the function that created it. The execution environment normally disappears after the function returns a value. However, when function outer returns function inner, function inner captures and stores the execution environment of function outer, and it doesn’t disappear.

- Primitive functions call C code directly and don’t have an associated environment.

我们也称 power() 这样生成 function 的 function 为 function factory。Function factories are most useful when:

- The different levels are more complex, with multiple arguments and complicated bodies.

- Some work only needs to be done once, when the function is generated.

7.3 Mutable state

Having variables at two levels allows you to maintain state across function invocations. This is possible because while the execution environment is refreshed every time, the enclosing environment is constant.

new_counter <- function() {

i <- 0

function() {

i <<- i + 1

i

}

}

counter_a <- new_counter()

counter_b <- new_counter()

counter_a()

#> [1] 1

counter_a()

#> [1] 2

counter_a()

#> [1] 3

counter_b()

#> [1] 1

counter_b()

#> [1] 2

counter_b()

#> [1] 3

是不是有点像 object 和 object member!

7.4 Lists of functions

起手式:

x <- 1:10

funs <- list(

sum = sum,

mean = mean,

median = median

)

lapply(funs, function(f) f(x))

#> $sum

#> [1] 55

#>

#> $mean

#> [1] 5.5

#>

#> $median

#> [1] 5.5

8. Functionals

The complement to a closure is a functional, a function that takes a function as an input.

8.1 My first functional: lapply()

- Input